[Archives] Deprecated documentation

We are moving the documentation of the Gazelle tools to this website: https://gazelle.ihe.net/gazelle-documentation/

[Deprecated] General considerations - JBoss 5

Warning: This documentation is outdated; the newest version is available at https://gazelle.ihe.net/gazelle-documentation/General/jboss5.html

This page describes the prerequisites for installing Gazelle applications. All the tools developed in the context of the Gazelle testbed project are developed for JBoss (5.0.1-GA or 7.2.0.final) and use a PostgreSQL database.

We recommend installing the Gazelle tools in a Debian-like environment: this is the environment running on the IHE Europe servers, so we know that it works correctly. Moreover, most of the installation and configuration procedures are described for such an environment.

PostgreSQL

We are currently using PostgreSQL 9.1 on most of our servers.

JBoss 5.0.1-GA application server

Install a JVM and JBoss AS

We usually use the Java virtual machine provided by Oracle. If you are using Ubuntu, you can proceed as follows:

- Add this ppa to your sources list: deb http://ppa.launchpad.net/webupd8team/java/ubuntu precise main

- Install oracle-java6-installer and oracle-java6-set-default

echo "deb http://ppa.launchpad.net/webupd8team/java/ubuntu precise main" | tee -a /etc/apt/sources.list

echo oracle-java6-installer shared/accepted-oracle-license-v1-1 boolean true | /usr/bin/debconf-set-selections

apt-key adv --keyserver keyserver.ubuntu.com --recv-keys EEA14886

apt-get update

apt-get install oracle-java6-installer oracle-java6-set-default

Then install JBoss 5.1.0.GA: a ZIP file is available at http://freefr.dl.sourceforge.net/project/jboss/JBoss/JBoss-5.1.0.GA/jboss-5.1.0.GA-jdk6.zip; unzip this file.

Configure the server

Create a server to host Gazelle tools: copy ${YOUR_JBOSS}/server/default directory to ${YOUR_JBOSS}/server/gazelle. Make sure to correctly set the owner and the rights of this new directory.

To secure your server, remove admin-console.war, jmx-console.war and ROOT.war from your ${YOUR_JBOSS}/server/gazelle/deploy directory.
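The two configuration steps above can be sketched as a small shell helper. This is only an illustration: the function name create_gazelle_profile and the optional owner argument are assumptions made for the example, not part of JBoss or of the Gazelle tooling. The first argument plays the role of ${YOUR_JBOSS}.

```shell
#!/bin/sh
# Sketch only: builds the "gazelle" server profile described above.
# create_gazelle_profile is a hypothetical helper name.
#   $1: JBoss installation directory (the ${YOUR_JBOSS} of this page)
#   $2: optional owner passed to chown, e.g. "jboss:jboss" (assumed account)
create_gazelle_profile() {
    jboss_home="$1"
    owner="${2:-}"
    src="$jboss_home/server/default"
    dst="$jboss_home/server/gazelle"

    if [ ! -d "$src" ]; then
        echo "missing profile: $src" >&2
        return 1
    fi
    if [ -e "$dst" ]; then
        echo "refusing to overwrite: $dst" >&2
        return 1
    fi

    # Copy the default profile, preserving permissions and timestamps.
    cp -Rp "$src" "$dst" || return 1

    # Remove the consoles and the root application from the new profile.
    for app in admin-console.war jmx-console.war ROOT.war; do
        rm -rf "$dst/deploy/$app"
    done

    # Hand the new profile over to the account that runs JBoss, if one was given.
    if [ -n "$owner" ]; then
        chown -R "$owner" "$dst" || return 1
    fi
}
```

A call such as `create_gazelle_profile /usr/local/jboss` leaves the default profile untouched and refuses to run a second time, so an existing gazelle profile is never overwritten.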

Database driver

JBoss WS native

Two of the actors specified in the HPD integration profile are SOAP clients. The implementation of these actors uses the JBoss web service framework named JBoss WS native. By default, JBoss 5.1.0 embeds JBoss WS native 3.1.2. Unfortunately, this version of the module contains some bugs and we have been forced to update this framework to a more recent version: jbossws-native-3.4.0.GA. This is the most recent version of this module which is compatible with JBoss 5.1.0.GA. To upgrade JBoss WS native in your JBoss, please refer to the documentation available on JBoss's web site: https://community.jboss.org/wiki/JBossWS-Installation

[Deprecated] General considerations - JBoss 7

Warning: This documentation is outdated; the newest version is available at https://gazelle.ihe.net/gazelle-documentation/General/jboss7.html

This page describes the prerequisites for installing Gazelle applications. All the tools developed in the context of the Gazelle testbed project are developed for JBoss (5.0.1-GA or 7.2.0.final) and use a PostgreSQL database.

We recommend installing the Gazelle tools in a Debian-like environment: this is the environment running on the IHE Europe servers, so we know that it works correctly. Moreover, most of the installation and configuration procedures are described for such an environment.

PostgreSQL

We are currently using PostgreSQL 9.1 on most of our servers.

Install a JVM

Most of our applications running on JBoss 7 use Java 7. Consider installing OpenJDK.

sudo apt-get install openjdk-7-jre

Install JBoss 7 application server

- Get package from: http://gazelle.ihe.net/jboss/jboss-as-7.2.0.Final.zip

wget -nv -O /tmp/jboss-as-7.2.0.Final.zip https://gazelle.ihe.net/jboss7/jboss-as-7.2.0.Final.zip

Be sure to use this packaged version: it includes the PostgreSQL driver and uses different versions of the hibernate and javassist modules.

- Get init script from: http://gazelle.ihe.net/jboss/init.d_jboss7

wget -nv -O /tmp/init.d_jboss7 https://gazelle.ihe.net/jboss7/init.d_jboss7

- Install jboss in the /usr/local folder

cd /usr/local

sudo mv /tmp/jboss-as-7.2.0.Final.zip .

sudo unzip ./jboss-as-7.2.0.Final.zip

sudo ln -s jboss-as-7.2.0.Final jboss7

sudo chown -R jboss:jboss-admin /usr/local/jboss7

sudo chmod -R 755 /usr/local/jboss-as-7.2.0.Final

sudo chown -R jboss:jboss-admin /var/log/jboss7/

sudo chmod -R g+w /var/log/jboss7/

- Install the init script and make it start at system startup

sudo mv /tmp/init.d_jboss7 /etc/init.d/jboss7

sudo chmod +x /etc/init.d/jboss7

sudo update-rc.d jboss7 defaults

Install JBoss 7 application server - Automated script

wget https://gazelle.ihe.net/jenkins/job/Installer_script/ws/jboss7/setup7.sh

wget https://gazelle.ihe.net/jenkins/job/Installer_script/ws/jboss7/common.sh

wget https://gazelle.ihe.net/jenkins/job/Installer_script/ws/jboss7/jboss7

sudo chmod +x setup7.sh

sudo chmod +x common.sh

sudo chmod +x jboss7

./setup7.sh

[Deprecated] DDS - Demographic Data Server

Warning: This documentation is outdated; the newest version is available at https://gazelle.ihe.net/gazelle-documentation

Click here to enter the Demographic Data Server

The Demographic Data Server is a tool that generates random demographic information and makes it available to other tools for testing purposes.

Gazelle DDS use cases

The Demographic Data Server addresses the following use cases:

- Generate realistic data sets to fill a database with data for testing purposes.

- Request data on demand through web services

- Transfer data through HL7 V2 or HL7 V3 messages (using multibyte character encoding when necessary)

- Support for different kinds of character encoding.

- Support for many languages and countries

- Usage through web interface (for humans) or web services (for machines)

For more details about the various functionalities of the DDS tool, visit the following links.


[Deprecated] DDS - User Manual for user interface

Warning: This documentation is outdated; the newest version is available at https://gazelle.ihe.net/gazelle-documentation

Overview of the Demographic Data Server (DDS) project

When running a test we often need to inject demographic data. The aim of the tool is to generate the necessary data. Generated data are fictitious (not real) but look like real data. Demographic characteristics consist of

- a name, last name, mother's maiden name ...

- date of birth,

- sex,

- religion,

- race,

- address

- etc ...

The address consists of the street, town, state, zip code and country. Addresses are randomly generated. We use the geonames database and the Google Maps geocoding web services to generate random addresses or to perform more specific searches. Generated addresses contain zip code information matching the city name. Demographic information can currently be generated for the United States, France, Germany and Japan. We are working on including data for more countries. The Demographic Data Server takes into account information about the frequency of first names, last names, races and religions. It provides a web user interface as well as a web service interface.

The Java documentation of this project is available here.

DDS Web User interface

Users can access DDS through the DDS web page. The GUI offers the possibility to generate patient data, see all generated patient data and share this patient data with other systems in HL7 v2 or v3.

How to create new patient data?

To create a new patient, open the patient creation menu. On the create patient page, the user can choose between two tabs: "Patient's generation" and "Advances Patient's generation".

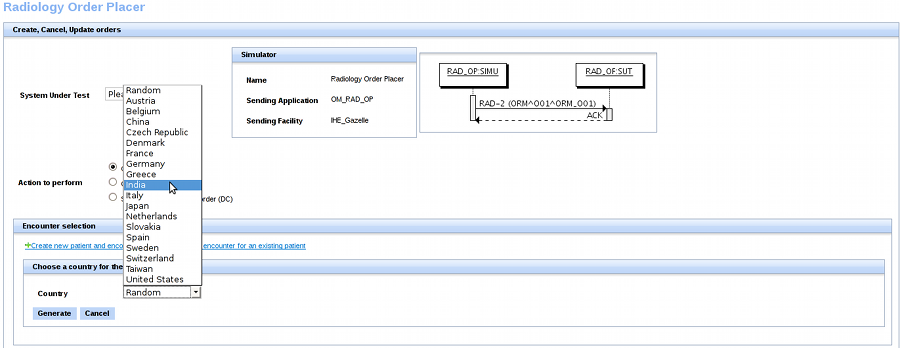

- In the first tab, "Patient's generation", the user will be allow to create patient information by selecting the country

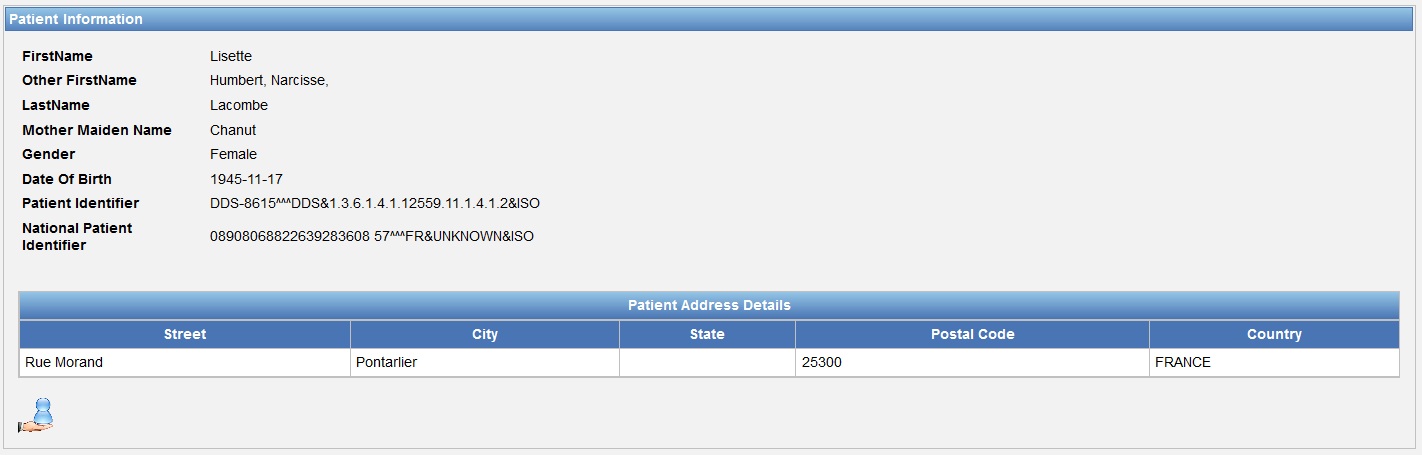

. Once the country has been selected, hit the "Generate Demographic Data" button. The generated patient will have basic information (last name, first name, other firstname, mother maiden name, gender, data of birth, patient identifier in DDS, National Patient Identifier and one address). It is possible that some of this information are missing. At the bottom of the Patient Information panel, the

. Once the country has been selected, hit the "Generate Demographic Data" button. The generated patient will have basic information (last name, first name, other firstname, mother maiden name, gender, data of birth, patient identifier in DDS, National Patient Identifier and one address). It is possible that some of this information are missing. At the bottom of the Patient Information panel, the  button can be used to send patient (with HL7 v2 or v3 messages) to the user system. Go to the next part below to send a patient with DDS. See below, one example of a generated patient :

button can be used to send patient (with HL7 v2 or v3 messages) to the user system. Go to the next part below to send a patient with DDS. See below, one example of a generated patient :

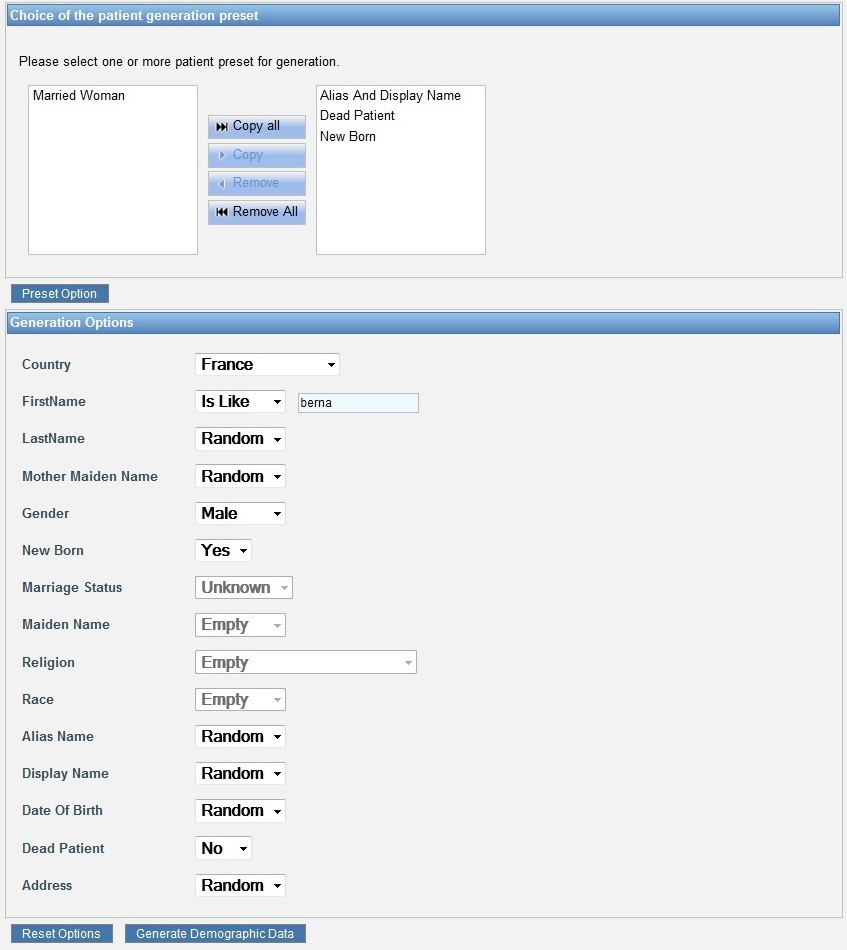

- In the second tab, "Advances Patient's generation", the user can create advanced patient information using many criteria. All criteria are in the "Generation Options" panel. Once the options are set, hit the generation button to generate patient information. DDS also lets the user preset the generation options according to one or more patient presets. To do so, select a preset in the left panel and hit the corresponding button to add it to the selection (more than one preset can be selected at the same time). Once all desired presets are selected (selected presets appear in the right panel), hit the corresponding button to preset the options accordingly, then hit the generation button. For example, if the user chooses the "Dead Patient" preset, the "Dead Patient" option will be set to "Yes". This functionality lets the user set the generation options quickly. See below the result of a generated patient.

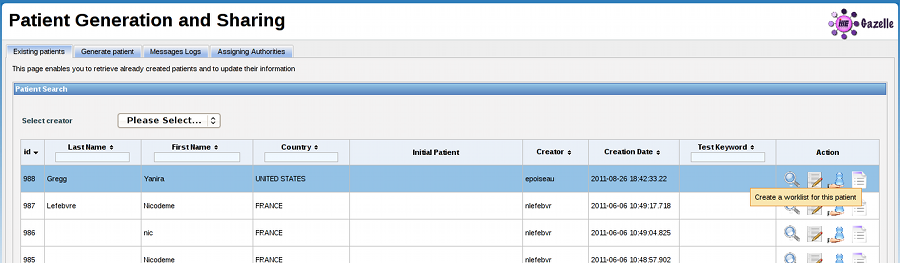

How to consult existing patient data?

To see all patient data generated by DDS, open the corresponding menu. This page shows the user, in a table, all patient data generated by DDS. The FirstName and LastName filters can be used to search for a specific patient. It is also possible to sort the patient data by Id (Id in DDS), FirstName, LastName, Gender, Race, Religion ... by hitting the sort button.

In the action column:

- One button lets the user see the patient data in a pop-up.

- Another button lets the user add the patient to the selected patient list. This list can be used to share patients. The user can add as many patients to this list as desired.

Finally, just below the patient data table, the user can find all patients of the selected patient list:

- One button can be used to share all patient data of the selected patient list.

- Another button can be used to reset the selected patient list.

The refresh button (above the patient table) can be used to refresh the list of patients in the patient data table.

How to share patient data?

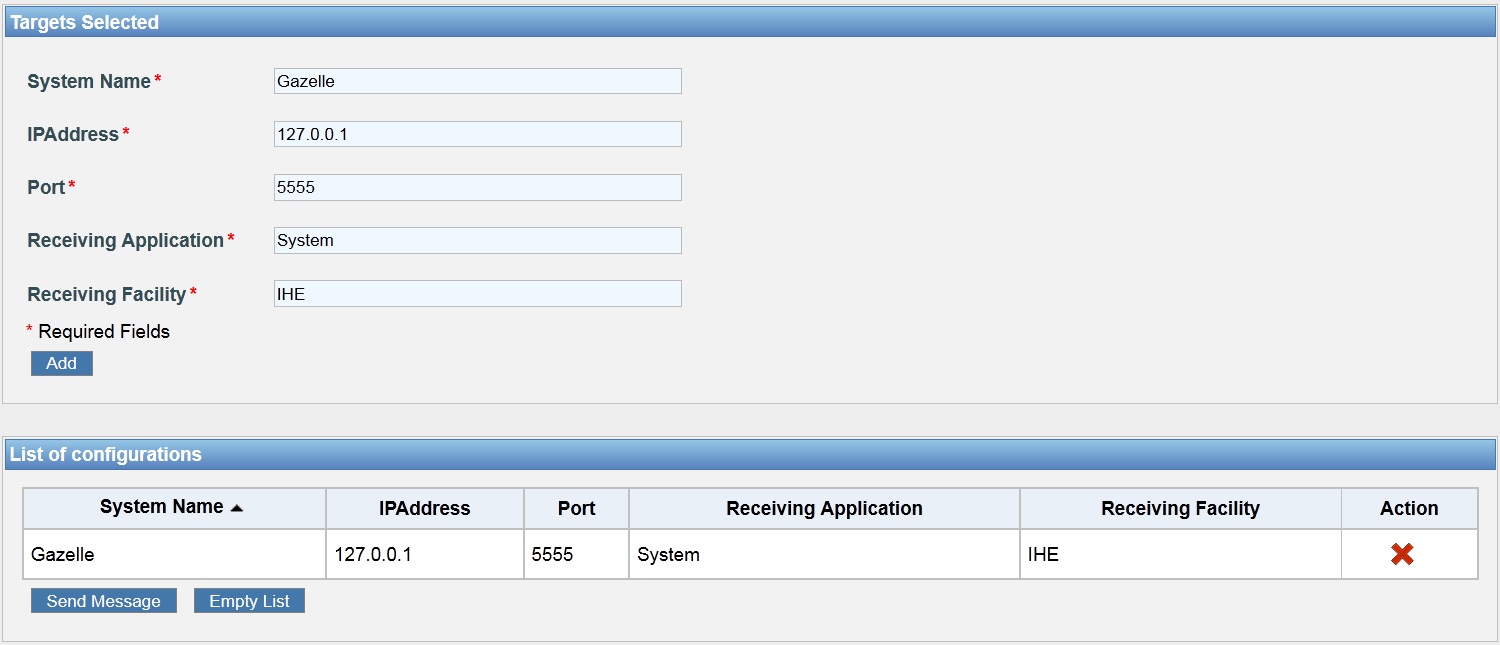

The GUI allows the user to send the selected patients through HL7 v2 or v3 messages. Once the user has selected the patients to send to their system (see the section above), the sending options must be configured:

- The user must select the HL7 message type and version. Four options are available, see the screenshot below.

- Once the message type has been selected, the user must choose the character set encoding to use (only available for HL7 version 2). The list of character set encodings depends on the country of the patients to send.

- Then, the user must fill in the Targets Selected fields. This information describes the user's system, which will receive the patient data. Patient data can be sent to several systems at the same time. See the example below, for HL7 v2. Hit the Add button to add the configuration to the list of configurations. Be careful: your target must have a port open on the internet. Before sending messages to your system, ensure that your firewall gives DDS access to your system.

- Finally, hit the send button to send all selected patient data to the configured target(s). A summary of the exchanges will appear just below the configuration panel. For each message, a button lets you see the full content of the sent message or of the acknowledgment message. See the example below.

Web Services Interface

The WSDL file describing the web service is here. You can also download an example soapUI project that uses these methods from here.

Functionalities

The functionalities of DDS can be used through web service methods. DDS implements 8 web service methods:

- returnAddress : generate a random address from the country code

- returnAddressByCoordinates : generate a specific address from a country code and coordinates

- returnAddressByTown : generate a specific address from a town

- returnHL7Message : generate an HL7 message in XML format from a country code

- returnPatient : generate a patient from a country code. Generation can be restricted by specifying attributes such as the gender, a similar name or a part of the name

- returnPatientWithAllOptions : generate a patient from a country code. All options to generate a patient are available here.

- returnPerson : generate a person without address, using the same generation attributes as for a patient

- sendHL7Message : generate and send an HL7 message to a target host and port.

The documentation of the classes in this jar is there.

Web Services Limitation

We do not have unlimited resources to offer for this service. Access to the web service is therefore limited to a "reasonable" number of requests per day and per minute. We would like to avoid a DoS on the Gazelle tools because someone is requesting fake patients every second.

Our limits are:

- No more than 30 requests per IP address per minute

- No more than 3000 requests per period of 24 hours per IP address

If you would like to generate a large amount of random data, please get in contact with Eric Poiseau and we will try to help you and generate data that meets your needs.

[Deprecated] DDS - User Manual for Web Services

Web Services Interface

The WSDL file describing the web service is here. You can also download an example soapUI project that uses these methods from here.

Functionalities

The functionalities of DDS can be used through web service methods. DDS implements 12 web service methods. See the table below for further information:

| Method Name | Description | Parameter Name | Possible Values |
|---|---|---|---|
| getListCountryCode | Return all available country codes. | No parameter. | |
| returnAddress | Generate a specific address from a country code. | countryCode: The code of the country used to generate the patient. | For example: JP, FR, DE, US, ... (To know all possible values, see the ISO code of each country. Use the getListCountryCode method.) |
| returnAddressByCoordinates | Generate a specific address from a country code and coordinates. | countryCode: The code of the country used to generate the patient. | For example: JP, FR, DE, US, ... (To know all possible values, see the ISO code of each country. Use the getListCountryCode method.) |
| | | lat: The latitude of the place from which the address is extracted. | For example: 53.5459481506 |
| | | lng: The longitude of the place from which the address is extracted. | For example: 10.204494639 |
| returnAddressByTown | Generate a specific address from a town. | townName: The name of the town. | For example: Paris, London, Toronto, Roma, ... |
| returnPerson | Return a person from some parameters. | countryCode: The code of the country used to generate the patient. | For example: JP, FR, DE, US, ... (To know all possible values, see the ISO code of each country. Use the getListCountryCode method.) |
| | | lastNameOption: Specify if you want to generate the last name or not. | true or false |
| | | firstNameOption: Specify if you want to generate the first name or not. | true or false |
| | | motherMaidenNameOption: Specify if you want to generate the mother's maiden name or not. | true or false |
| | | religionOption: Specify if you want to generate the religion of the person or not. | true or false |
| | | raceOption: Specify if you want to generate the race of the person or not. | true or false |
| | | birthDayOption: Specify if you want to generate the birthday of the person or not. | true or false |
| | | genderDescription: Specify the gender of the person. | Male, Female, male, female, m, M, f, F or Random. For any other value, the gender is generated randomly. |
| | | firstNameLike: Specify it if you want a first name close to the specified first name. (You have to choose between firstNameLike and firstNameIs.) | For example: Nico, Dav, ... |
| | | lastNameLike: Specify it if you want a last name close to the specified last name. (You have to choose between lastNameLike and lastNameIs.) | For example: Jam, lef, ... |
| | | firstNameIs: Specify it if you want a person with this exact first name. (You have to choose between firstNameLike and firstNameIs.) | For example: Nicolas |
| | | lastNameIs: Specify it if you want a person with this exact last name. (You have to choose between lastNameLike and lastNameIs.) | For example: James |
| returnSimplePatient | Return a simple patient from a specific country. | countryCode: The code of the country used to generate the patient. | For example: JP, FR, DE, US, ... (To know all possible values, see the ISO code of each country. Use the getListCountryCode method.) |
| returnPatient | Return a patient from some parameters. | countryCode: The code of the country used to generate the patient. | For example: JP, FR, DE, US, ... (To know all possible values, see the ISO code of each country. Use the getListCountryCode method.) |
| | | lastNameOption: Specify if you want to generate the last name or not. | true or false |
| | | firstNameOption: Specify if you want to generate the first name or not. | true or false |
| | | motherMaidenNameOption: Specify if you want to generate the mother's maiden name or not. | true or false |
| | | religionOption: Specify if you want to generate the religion of the person or not. | true or false |
| | | raceOption: Specify if you want to generate the race of the person or not. | true or false |
| | | birthDayOption: Specify if you want to generate the birthday of the person or not. | true or false |
| | | genderDescription: Specify the gender of the person. | Male, Female, male, female, m, M, f, F or Random. For any other value, the gender is generated randomly. |
| | | firstNameLike: Specify it if you want a first name close to the specified first name. (You have to choose between firstNameLike and firstNameIs.) | For example: Nico, Dav, ... |
| | | lastNameLike: Specify it if you want a last name close to the specified last name. (You have to choose between lastNameLike and lastNameIs.) | For example: Jam, lef, ... |
| | | firstNameIs: Specify it if you want a person with this exact first name. (You have to choose between firstNameLike and firstNameIs.) | For example: Nicolas |
| | | lastNameIs: Specify it if you want a person with this exact last name. (You have to choose between lastNameLike and lastNameIs.) | For example: James |
| returnPatientWithAllOptions | The most complete method to return a patient. Many parameters are available. | countryCode: The code of the country used to generate the patient. | For example: JP, FR, DE, US, ... (To know all possible values, see the ISO code of each country. Use the getListCountryCode method.) |
| | | lastNameOption: Specify if you want to generate the last name or not. | true or false |
| | | firstNameOption: Specify if you want to generate the first name or not. | true or false |
| | | motherMaidenNameOption: Specify if you want to generate the mother's maiden name or not. | true or false |
| | | religionOption: Specify if you want to generate the religion of the person or not. | true or false |
| | | raceOption: Specify if you want to generate the race of the person or not. | true or false |
| | | birthDayOption: Specify if you want to generate the birthday of the person or not. | true or false |
| | | addressOption: Specify if you want to generate the address of the patient or not. | true or false |
| | | genderDescription: Specify the gender of the person. | Male, Female, male, female, m, M, f, F or Random. For any other value, the gender is generated randomly. |
| | | firstNameLike: Specify it if you want a first name close to the specified first name. (You have to choose between firstNameLike and firstNameIs.) | For example: Nico, Dav, ... |
| | | lastNameLike: Specify it if you want a last name close to the specified last name. (You have to choose between lastNameLike and lastNameIs.) | For example: Jam, lef, ... |
| | | firstNameIs: Specify it if you want a person with this exact first name. (You have to choose between firstNameLike and firstNameIs.) | For example: Nicolas |
| | | lastNameIs: Specify it if you want a person with this exact last name. (You have to choose between lastNameLike and lastNameIs.) | For example: James |
| | | maritalSatusOption: Specify the marital status of the patient. | Possible values are: Married or M, Single or S, Divorced or D, Unknown or U, Random or R. |
| | | deadPatientOption: Specify if you want to generate a dead patient or not. If yes, the date of the patient's death is chosen randomly. | true or false |
| | | maidenNameOption: Specify if you want to generate a maiden name for the patient or not. Note that the maiden name cannot be generated if the patient's gender is not female. | true or false |
| | | aliasNameOption: Specify if you want to generate an alias name for the patient or not. | true or false |
| | | displayNameOption: Specify if you want to generate a display name for the patient or not. | true or false |
| | | newBornOption: Specify if you want to generate a newborn patient or not. If yes, the patient will have a mother and the patient's age will be between 1 and 2 days. If the newborn option is true, the marital status must be set to 'Unknown' or 'U', because a newborn cannot be married or divorced. | true or false |
| returnHl7Message | Return an HL7 v2 message containing the description of a patient from a specific country. | countryCode: The code of the country used to generate the patient. | For example: JP, FR, DE, US, ... (To know all possible values, see the ISO code of each country. Use the getListCountryCode method.) |
| | | receivingApplication: The Application of your system. (MSH-5) | See the IHE Technical Framework for more information about this field. |
| | | receivingFacility: The Facility of your system. (MSH-6) | See the IHE Technical Framework for more information about this field. |
| | | characterSet: The character set encoding of the HL7 message. (MSH-18) | Possible values depend on the country. For example, for France, the available character sets are UTF-8 and ISO-8859-1. If you ask for a character set which is not supported by DDS, DDS will return a SOAPException with a message listing all possible character sets. |
| | | hl7Version: The HL7 version of the message. (MSH-12) | Possible values: 2.3.1 or 2.5 |
| | | messageType: The message type of the HL7 message. (MSH-9) | Possible values: ADT^A01^ADT_A01, ADT^A04^ADT_A01 or ADT^A28^ADT_A05. ADT^A28^ADT_A05 is only available with HL7 v2.5; ADT^A01^ADT_A01 and ADT^A04^ADT_A01 are only available with HL7 v2.3.1. |
| sendHl7Message | Send an HL7 v2 message containing the description of a patient from a specific country to a target host and port. This method returns the message response. | countryCode: The code of the country used to generate the patient. | For example: JP, FR, DE, US, ... (To know all possible values, see the ISO code of each country. Use the getListCountryCode method.) |
| | | targetHost: The IP address of your system, which will receive the HL7 message. | Example: 137.114.220.XXX |
| | | targetPort: The port on which your system will receive the HL7 message. | Example: 1030 |
| | | receivingApplication: The Application of your system. (MSH-5) | See the IHE Technical Framework for more information about this field. |
| | | receivingFacility: The Facility of your system. (MSH-6) | See the IHE Technical Framework for more information about this field. |
| | | characterSet: The character set encoding of the HL7 message. (MSH-18) | Possible values depend on the country. For example, for France, the available character sets are UTF-8 and ISO-8859-1. If you ask for a character set which is not supported by DDS, DDS will return a SOAPException with a message listing all possible character sets. |
| | | hl7Version: The HL7 version of the message. (MSH-12) | Possible values: 2.3.1 or 2.5 |
| | | messageType: The message type of the HL7 message. (MSH-9) | Possible values: ADT^A01^ADT_A01, ADT^A04^ADT_A01 or ADT^A28^ADT_A05. ADT^A28^ADT_A05 is only available with HL7 v2.5; ADT^A01^ADT_A01 and ADT^A04^ADT_A01 are only available with HL7 v2.3.1. |
| returnHl7v3Message | Return an HL7 v3 message containing the description of a patient from a specific country. | countryCode1: The code of the country used to generate the patient. | For example: JP, FR, DE, US, ... (To know all possible values, see the ISO code of each country. Use the getListCountryCode method.) |
| sendHl7v3Message | Send an HL7 v3 message containing the description of a patient from a specific country to a URL. | countryCode: The code of the country used to generate the patient. | For example: JP, FR, DE, US, ... (To know all possible values, see the ISO code of each country. Use the getListCountryCode method.) |
| | | systemName: The name of your system. | The name of your system. |
| | | url: The URL of your system. | The URL of your system. |
| | | receivingApplication: The Application of your system. | See the IHE Technical Framework for more information about this field. |
| | | receivingFacility: The Facility of your system. | See the IHE Technical Framework for more information about this field. |
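The table above ties each messageType value to one hl7Version value for returnHl7Message and sendHl7Message. As an illustration, a caller could check the pair locally before issuing the request. The sketch below assumes nothing about DDS itself: check_hl7_combination is a hypothetical helper name, and it only encodes the three combinations listed in the table.

```shell
#!/bin/sh
# Sketch only: client-side pre-check of the hl7Version / messageType pair.
# check_hl7_combination is a hypothetical helper, not part of DDS.
#   $1: hl7Version  (2.3.1 or 2.5)
#   $2: messageType (for example 'ADT^A28^ADT_A05')
# Returns 0 when the table above allows the pair, 1 otherwise.
check_hl7_combination() {
    version="$1"
    message_type="$2"
    case "$version|$message_type" in
        '2.3.1|ADT^A01^ADT_A01') return 0 ;;
        '2.3.1|ADT^A04^ADT_A01') return 0 ;;
        '2.5|ADT^A28^ADT_A05')   return 0 ;;
        *)                       return 1 ;;
    esac
}
```

For instance, `check_hl7_combination 2.5 'ADT^A28^ADT_A05'` succeeds, while the same message type with version 2.3.1 is rejected. Quote the message type so that the shell passes the `^` characters through unchanged.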

Static WS Client for DDS

We have implemented a static WS client for DDS. The related jar is easy to use: you only have to add the jar file to your project. The jar file can be downloaded here (from the Gazelle Maven repository).

Web Services Limitation

We do not have unlimited resources to offer for this service. Access to the web service is therefore limited to a "reasonable" number of requests per day and per minute. We would like to avoid a DoS on the Gazelle tools because someone is requesting fake patients every second.

Our limits are:

- No more than 30 requests per IP address per minute

- No more than 3000 requests per period of 24 hours per IP address

If you would like to generate a large amount of random data, please get in contact with Eric Poiseau and we will try to help you and generate data that meets your needs.
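For a client that runs continuously, the daily quota is the tighter of the two limits: 86400 seconds divided by 3000 requests gives one request every 28.8 seconds. The sketch below computes the smallest whole-second gap that stays under a given daily quota; pacing_seconds is a hypothetical helper name used only for this illustration.

```shell
#!/bin/sh
# Sketch only: smallest whole-second pause between requests that keeps a
# client under a daily request quota. pacing_seconds is a hypothetical helper.
#   $1: maximum number of requests per 24 hours (3000 for DDS)
pacing_seconds() {
    quota_per_day="$1"
    # Integer ceiling of 86400 / quota_per_day.
    echo $(( (86400 + quota_per_day - 1) / quota_per_day ))
}
```

With the DDS quota, `pacing_seconds 3000` prints 29. Sleeping 29 seconds between requests yields about two requests per minute, which also stays well below the limit of 30 requests per minute.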

You can exempt specific IP addresses from these limits.

To do this, update the database with a request of this kind:

UPDATE dds_user_request_historic SET unlimited=true WHERE ip_address='62.212.122.29';

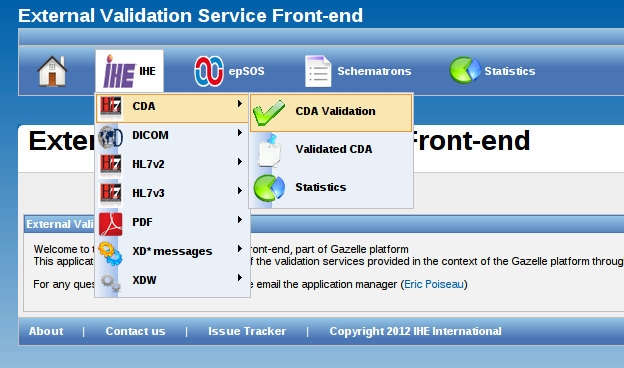

[Deprecated] EVSClient - Installation & Configuration

Warning: This documentation is outdated; the newest version is available at https://gazelle.ihe.net/gazelle-documentation

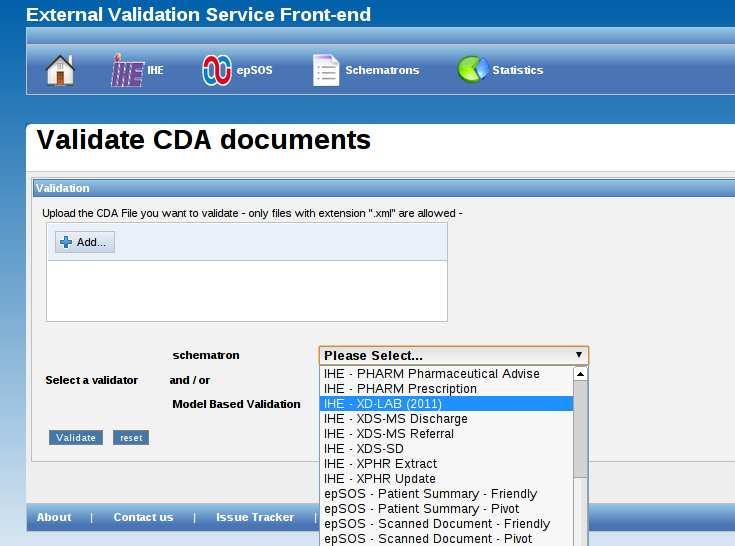

External Validation Service Front-end is a Maven project which calls several of the web services exposed by the Gazelle tools to validate messages and documents. It may also be plugged into other validation services.

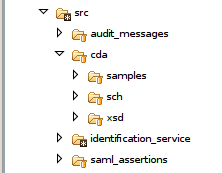

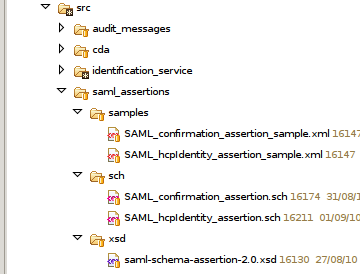

Sources

Sources of this project are available on the INRIA Forge; sources are managed using SVN. Anonymous access is available if you only want to check out the sources (read-only access). If you intend to build the tool and install it on your own server, we recommend using a tagged version, not the trunk, which is the development branch.

svn co https://scm.gforge.inria.fr/svn/gazelle/Maven/EVSClient/tags/EVSClient-version

To retrieve the current version of the tool, consult the release notes of the project in Jira.

Before compiling the application for the first time, you might have to update the pom.xml file of the parent project (EVSClient) in order to configure the database connection.

Maven artifact

Each version of the tool is published in our Nexus repository; download the latest release from here. Be careful: this released artifact is configured to connect to a database named evs-client-prod and owned by user gazelle.

Installation

Read the general considerations section of the installation guides to learn about the JBoss application server and the PostgreSQL database.

Once you have retrieved the archive, copy it to the deploy directory of your JBoss server. Be careful: the file copied into this folder shall be named exactly EVSClient.ear.

cp EVSClient-ear-3.1.0.ear /usr/local/jboss/server/${YOUR_SERVER}/deploy/EVSClient.ear
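Because the deployed file must be named exactly EVSClient.ear whatever the version of the downloaded archive, the copy step can be wrapped in a small helper that fixes the target name. This is a sketch under assumptions: deploy_evsclient is a hypothetical function name, and both paths are supplied by the caller.

```shell
#!/bin/sh
# Sketch only: copy a versioned EVSClient archive into a JBoss deploy
# directory under the fixed name EVSClient.ear.
# deploy_evsclient is a hypothetical helper name.
#   $1: path to the downloaded archive, e.g. EVSClient-ear-3.1.0.ear
#   $2: deploy directory of the JBoss server profile
deploy_evsclient() {
    archive="$1"
    deploy_dir="$2"

    if [ ! -f "$archive" ]; then
        echo "no such archive: $archive" >&2
        return 1
    fi
    if [ ! -d "$deploy_dir" ]; then
        echo "no such deploy directory: $deploy_dir" >&2
        return 1
    fi

    # The target name is fixed, so the version suffix of the archive is dropped.
    cp "$archive" "$deploy_dir/EVSClient.ear"
}
```

A call such as `deploy_evsclient EVSClient-ear-3.1.0.ear "/usr/local/jboss/server/${YOUR_SERVER}/deploy"` has the same effect as the cp command above, with an explicit error when either path is wrong.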

Users of the EVSClient tool upload the files to be validated to the server; those files are stored on the file system in specific directories. Only the root of the main directory can be configured in the database. On Debian-like systems, we usually store those files under /opt/EVSClient_prod. A ZIP file is available on the Forge; unzip it to easily create all the required directories, starting at /opt.

wget -nv -O /tmp/EVSClient-dist.zip "http://gazelle.ihe.net/jenkins/job/EVSClient-RELEASE/ws/EVSClient-ear/target/EVSClient-dist.zip"

unzip /tmp/EVSClient-dist.zip -d /

To finalize the installation, you must run the script which initializes the application preferences. An SQL script is available here; edit it and check its content before running it.
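As a minimal sketch, the script could be applied with psql as shown below. The database name and owner are the ones the released artifact expects (evs-client-prod and gazelle); the `init_preferences` function and the file name in the example are hypothetical and only serve the illustration.

```shell
#!/bin/sh
# Assumptions: database "evs-client-prod" owned by user "gazelle", as expected
# by the released artifact. The path to the SQL script is given by the caller.
DB_NAME="${DB_NAME:-evs-client-prod}"
DB_USER="${DB_USER:-gazelle}"

init_preferences() {
    script="$1"
    # Refuse to run if the script is missing, so nothing is applied by accident.
    if [ ! -f "$script" ]; then
        echo "SQL script not found: $script" >&2
        return 1
    fi
    # ON_ERROR_STOP makes psql abort at the first failing statement instead of
    # leaving the preferences half-initialized.
    psql -U "$DB_USER" -d "$DB_NAME" -v ON_ERROR_STOP=1 -f "$script"
}

# Example (hypothetical file name):
#   init_preferences /tmp/init-evsclient-preferences.sql
```

Review and adapt the values in the script (URLs, repository paths, e-mail addresses) before applying it.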

In order to take into account the new preferences, the application SHALL be restarted:

touch /usr/local/jboss/server/${YOUR_SERVER}/deploy/EVSClient.ear

Configuration

The application database is evs-client-prod.

The application uses its database to store:

- The application preferences

- The references to the standard/integration profiles that the user will be able to validate against

- The validation services which are available in the tool

- The topbar menu

- The links to the tools which forward messages/documents to the EVSClient for validation and might query the EVSClient for the result

The following sections explain how to configure the tool.

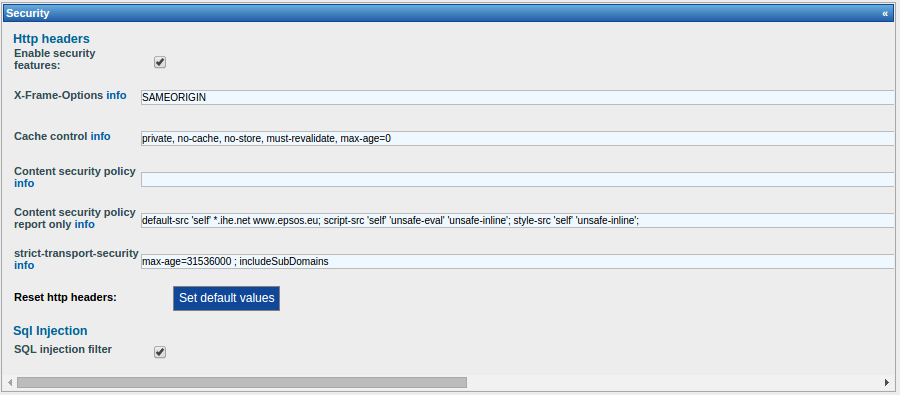

Application preferences

Users with admin_role role can access the application preference section through the menu Administration --> Manage application preferences.

The table below summarizes the preferences which are used by the tool along with their description and default value.

| Variable | Default value | Description |
| --- | --- | --- |
| application_database_initialization_flag | database_successfully_initialized | Indicates that the DB has been initialized |
| application_url | http://localhost:8080/EVSClient | URL to reach the tool |
| cas_enabled | false | Indicates authentication mechanism to use |
| ip_login | true | Indicates authentication mechanism to use |
| ip_login_admin | .* | Pattern to grant users as admin based on their IP address |
| cas_url | Not defined | URL of the CAS service |
| time_zone | Europe/Paris | Time zone to display time to users |
| atna_repository | /opt/EVSClient_prod/validatedObjects/ATNA | Where to store ATNA messages |
| cda_repository | /opt/EVSClient_prod/validatedObjects/CDA | Where to store CDA documents |
| dicom_repository | /opt/EVSClient_prod/validatedObjects/DICOM | Where to store DICOM files |
| dicom_scp_screener_xsl | dicom/TestDicomResults.xsl | XSL used to display Dicom SCP Screener results |
| display_SCHEMATRON_menu | false | Indicates if we need a link to the list of schematrons for download |
| dsub_repository | /opt/EVSClient_prod/validatedObjects/DSUB | Where to store DSUB files |
| epsos_repository_codes | /opt/EVSClient_prod/bin/EpsosRepository | Path to epSOS codes for epsos-cda-display-tool |
| gazelle_hl7v2_validator_url | http://gazelle.ihe.net/GazelleHL7Validator | Path to Gazelle HL7 Validator |
| hl7v3_repository | /opt/EVSClient_prod/validatedObjects/HL7v3 | Where to store HL7v3 messages |
| hpd_repository | /opt/EVSClient_prod/validatedObjects/HPD | Where to store HPD messages |
| include_country_statistics | true | Authorize or not the application to query geoip to retrieve the countries the users are from |
| monitor_email | test@test.com | Contact of the person who monitors the application |
| number_of_segments_to_display | 40 | Number of segments to display when displaying HL7v2 messages |
| object_for_validator_detector_repository | /opt/EVSClient_prod/validatedObjects/validatorDetector | Path to the repository where objects for validator_detector are stored |
| pdf_repository | /opt/EVSClient_prod/validatedObjects/PDF | Where to store PDF files |
| root_oid | 1.2.3 | Root of the OID used to uniquely identify validation requests |
| saml_repository | /opt/EVSClient_prod/validatedObjects/SAML | Where to store SAML assertions |
| svs_repository | /opt/EVSClient_prod/validatedObjects/SVS | Where to store SVS messages |
| tls_repository | /opt/EVSClient_prod/validatedObjects/TLS | Where to store certificates |
| xds_repository | /opt/EVSClient_prod/validatedObjects/XDS | Where to store XDS messages |
| xdw_repository | /opt/EVSClient_prod/validatedObjects/XDW | Where to store XDW messages |
| application_admin_email | contact@evsclient.net | Contact of the person responsible for the application |
| application_admin_name | contact | Person responsible for the application |
| application_issue_tracker_url | http://gazelle.ihe.net/browse/EVSCLT | URL of the project in the issue tracking system |
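Most of the *_repository preferences above point to sub-directories of validatedObjects under the root directory. The following is a minimal sketch for checking that these directories exist before starting the application. The function name `missing_repositories` and the `EVS_ROOT` variable are hypothetical, and the list only covers the validatedObjects sub-directories named in the table.

```shell
#!/bin/sh
# Root of the EVSClient file store; /opt/EVSClient_prod is the default value
# used by the preferences listed above.
EVS_ROOT="${EVS_ROOT:-/opt/EVSClient_prod}"

# Sub-directories of validatedObjects referenced by the *_repository preferences.
REPOSITORIES="ATNA CDA DICOM DSUB HL7v3 HPD PDF SAML SVS TLS XDS XDW validatorDetector"

# Print every expected directory that is missing under the given root.
# Returns 0 when all directories exist, 1 otherwise.
missing_repositories() {
    root="$1"
    status=0
    for name in $REPOSITORIES; do
        dir="$root/validatedObjects/$name"
        if [ ! -d "$dir" ]; then
            echo "$dir"
            status=1
        fi
    done
    return $status
}

# Example:
#   missing_repositories "$EVS_ROOT" || echo "some repositories are missing"
```

If you change a default path in the preferences, adapt the list accordingly.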

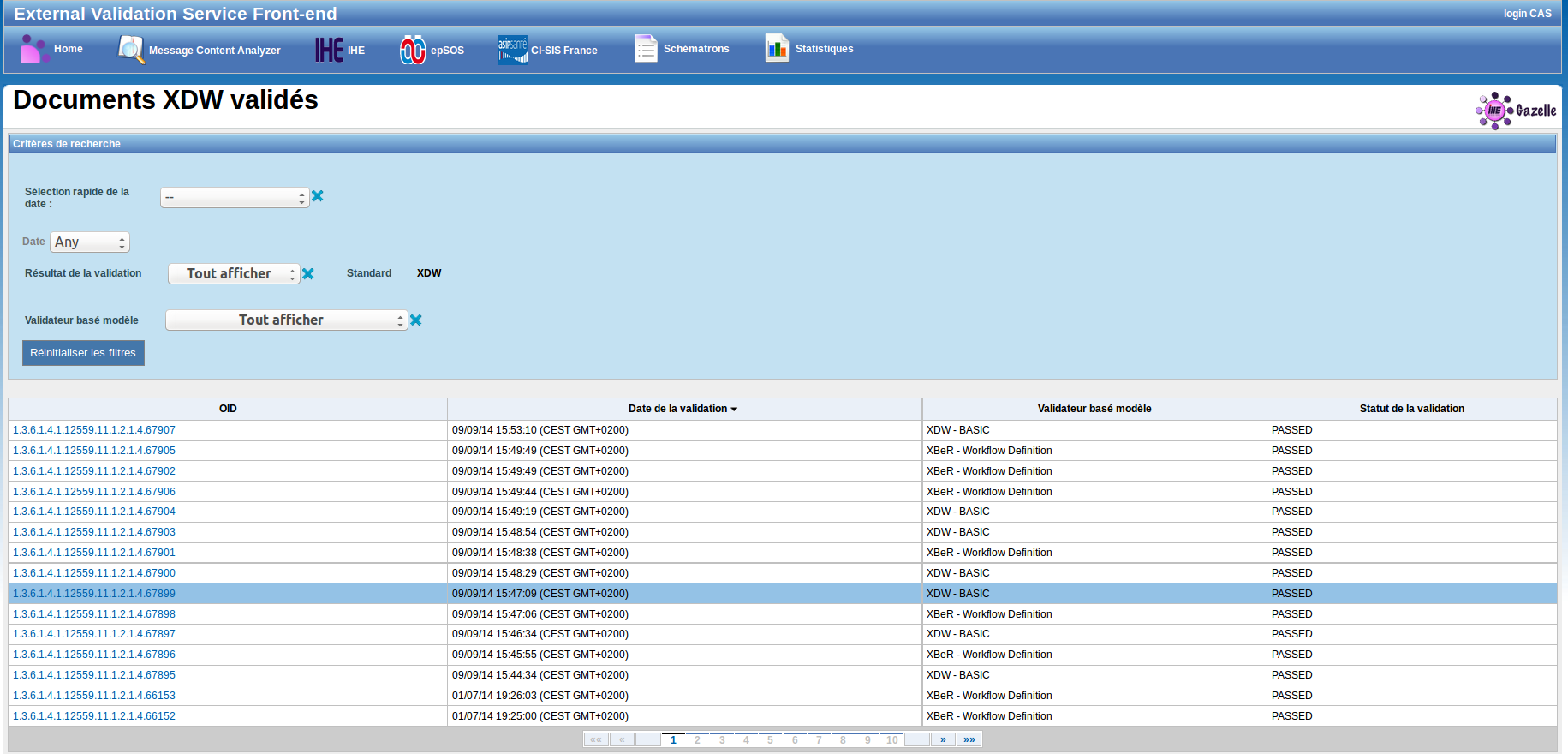

References to standards

What we call a referenced standard in the EVS Client tool is an entry which indicates the underlying standard or integration profile implemented by the system which produces the documents and/or messages that the tool is able to validate. We use this concept to structure both the Java code and the graphical user interface.

A referenced standard is defined by a name and, optionally, a version and an extension. Each entry in the database is given a unique label; we can also provide a name to be displayed to the user in the drop-down menus and a description explaining what the standard is and what the tool is going to validate.

Note that a pre-defined list of standard names is available; it matches the standards for which a validation service client has been implemented within the tool.

Administrators will access the section of the tool which enables the configuration of the standards from Administration --> Manage referenced standards. This page lists the standards already defined within the tool. You can edit them or add new ones.

When you create a new standard, make sure you use a unique label. In addition, check the spelling of the extension; it might be used by the tool to query for the list of available validation methods. Note that you can also provide a link to the image to be used in the drop-down menus. For XML-based documents/messages, you can provide the list of the XML stylesheets to use to nicely display the document/message to the user.

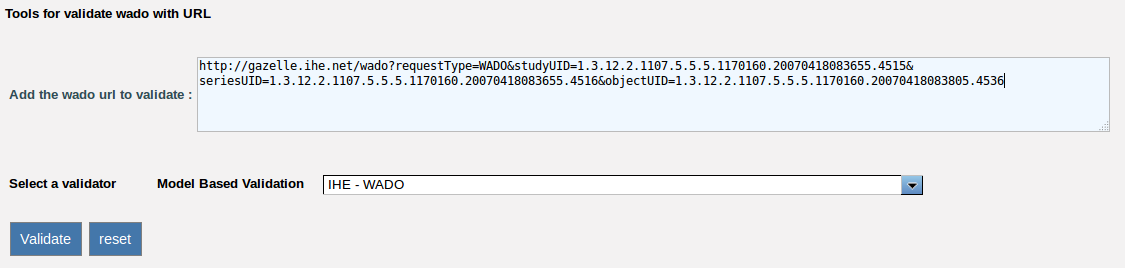

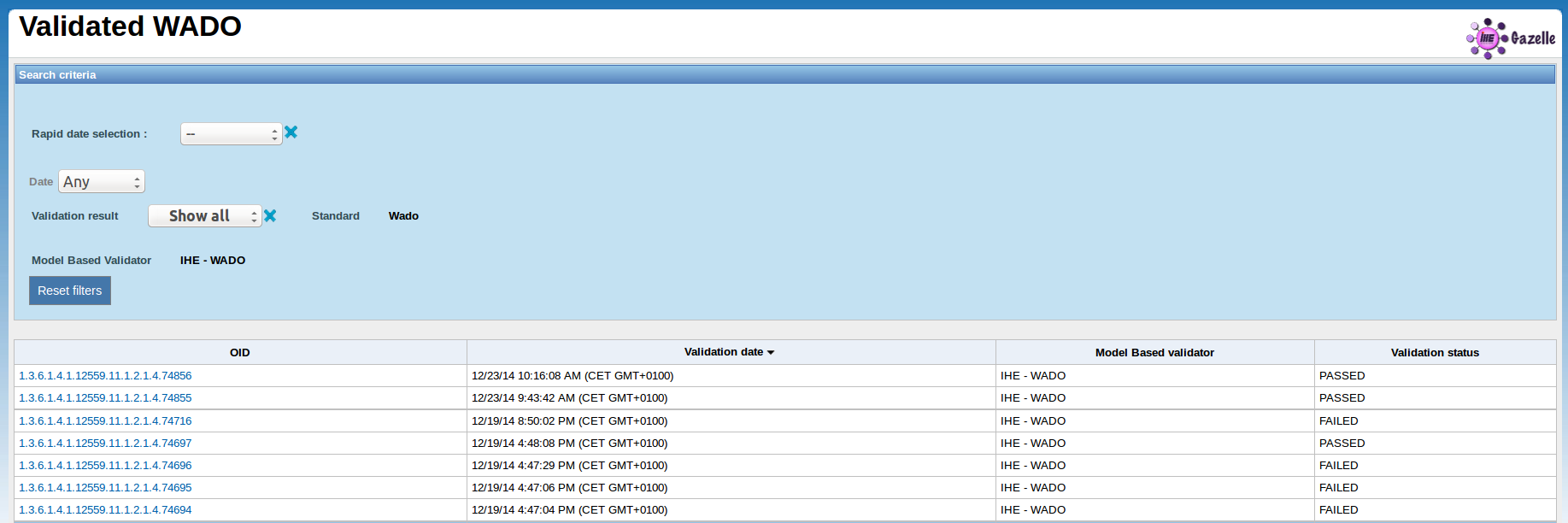

Currently available standards are HL7v2, HL7v3, CDA, TLS (stands for certificates), DSUB (metadata), XDS (metadata), XDW (metadata), HPD (messages), SVS (messages), WADO (requests), DICOM, SAML (assertions), ATNA (Audit messages).

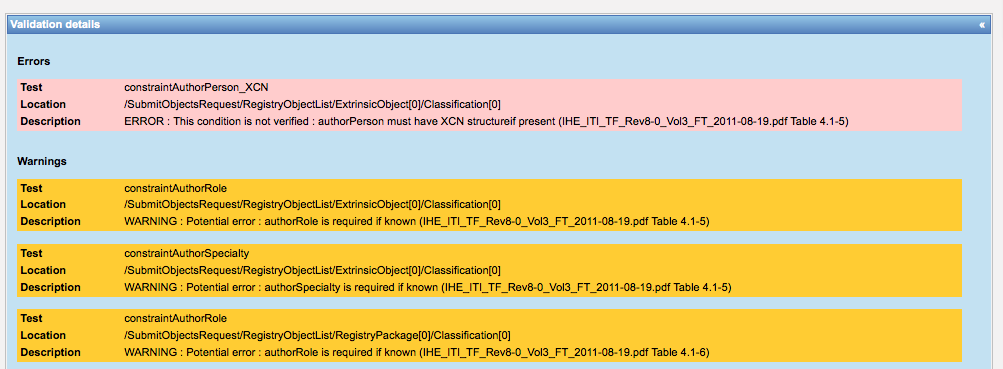

Validation services

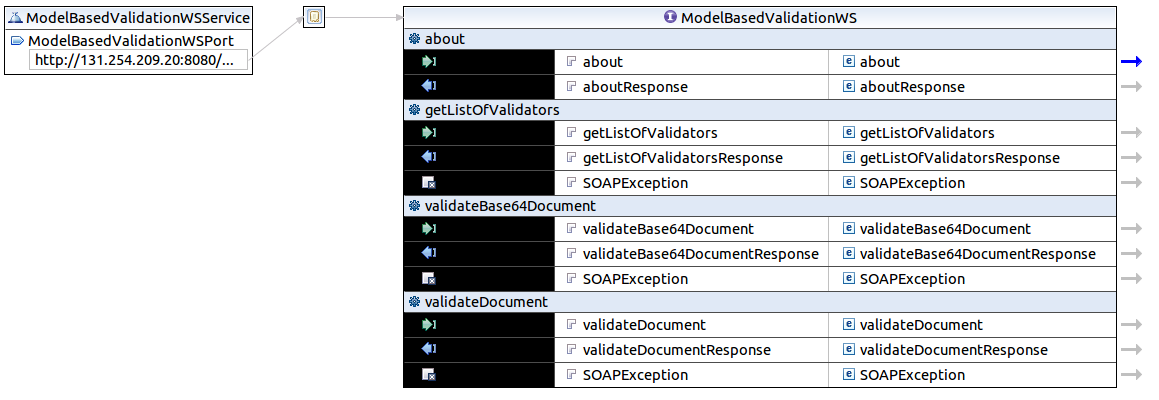

A validation service in the context of the EVSClient tool is either a web service exposed by another tool, a binary executed directly on the server, or a JAR library called by the tool. An entity has been created in the tool to store all the information about those services. This makes the services easier to manage and allows a different configuration depending on the location of the EVSClient tool.

Going to Administration --> Manage validation services, the administrator will access the list of all the validation services which are declared and used by the application. Each entry can be edited. You can also create new entries.

When defining a validation service you need to provide:

- A name and a unique keyword

- The validation engine (mainly used for validation of XML-based messages/documents; in that case it SHALL be schematron or model-based in order to indicate to the tool which client stub to use)

- The link to the stylesheet used to display the result (validation service might return results as an XML string)

- The endpoint to reach the service (may also be a path to a binary on your system)

- You can also indicate if the validation service is available to the user or not

- The list of standards to which this validation service applies.

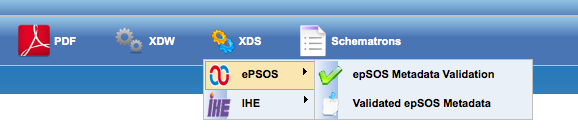

Configuring the top bar menu

A menu bar is made of two kinds of entities: the menu groups, which are the menu entries displayed in the top bar, and the menu entries, which are the entries displayed in the drop-down list. The top bar menu of EVSClient is built using a list of menu groups stored in the database. Administrator users can update this list from the page accessible at Administration --> Menu configuration. This page lists all the menu groups defined for this instance of the tool.

A menu group is defined by:

- a label and an icon (path to the icon can be relative to the application or can be an external reference)

- its order on the page

- the list of referenced standards which will be available from this menu group

- a boolean which indicates whether or not to display the menu group

For each standard listed in the menu group, the icon will be the one defined at standard level. For each menu (except for DICOM) the sub menus will be "validate", "validation logs" and "statistics". Links to these sections are automatically built from the standard name and extension.

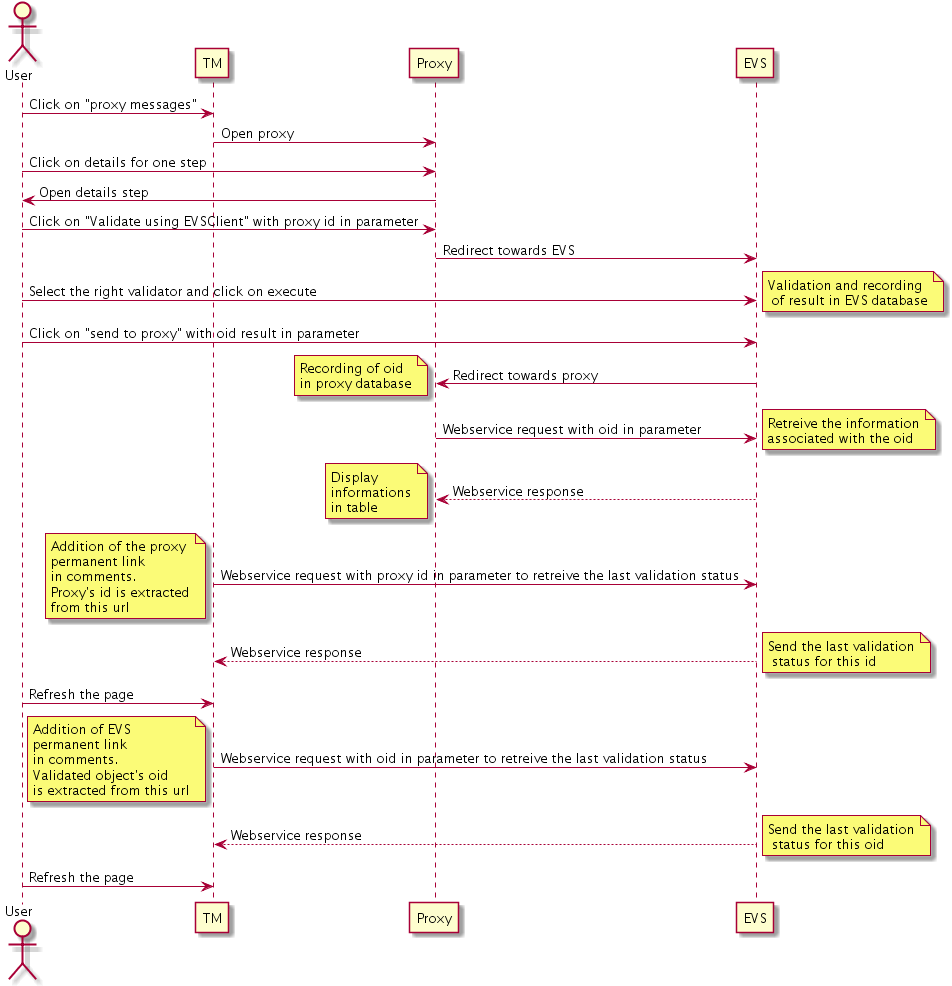

Calling tools

Some tools of the Gazelle testbed send validation requests to the EVSClient. To do so, we use servlets, and we may then want to send the result back to the calling tool. Since more than one tool may send such requests, we maintain a list of the tools which are likely to redirect the user to the EVS Client.

This list is accessible under Administration --> Calling tools.

For each tool, we need an OID which uniquely identifies the instance of the tool and the URL used to send back results. Currently two categories of tools can use the EVSClient in this way: Gazelle proxy instances and Gazelle Test Management instances. You need to specify the category of the tool so that the EVS Client knows how to send back results (if the feature is available in the calling tool).

Dicom validator installation

Dicom3Tools

We need to install Dicom3Tools. First install the packages required to build it:

- sudo apt-get install g++

- sudo apt-get install xutils-dev

Download the latest dicom3tools version (http://www.dclunie.com/dicom3tools/workinprogress/) and untar it.

Go into the extracted folder.

- ./Configure

- make World

- sudo make World install

Now create the symbolic links in /opt/dicom3tools (run these commands from within that directory):

- sudo ln -s /usr/local/bin/dcdump dcdump

- sudo ln -s /usr/local/bin/dciodvfy dciodvfy

- cd ..

- sudo chown -R gazelle:gazelle dicom3tools/
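The linking step above can be sketched as a small function. This is an illustration only: the function name `link_dicom3tools` is hypothetical, and the default paths are the ones used in this section.

```shell
#!/bin/sh
# Link the dicom3tools binaries used for DICOM validation into a target
# directory. Defaults mirror the paths used above; both can be overridden.
link_dicom3tools() {
    bin_dir="${1:-/usr/local/bin}"
    target_dir="${2:-/opt/dicom3tools}"
    mkdir -p "$target_dir" || return 1
    for tool in dcdump dciodvfy; do
        # -f replaces a stale link left by a previous installation.
        ln -sf "$bin_dir/$tool" "$target_dir/$tool" || return 1
    done
}

# Example (run as root, then hand the directory over to the gazelle user):
#   link_dicom3tools && chown -R gazelle:gazelle /opt/dicom3tools
```

Passing the two directories as arguments makes it possible to try the function in a scratch directory before touching /opt.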

Pixelmed

- Launch a build of http://gazelle.ihe.net/jenkins/job/Pixelmed-jar/

- Download Pixelmed-jar-x.x.x-script.zip (example: http://gazelle.ihe.net/jenkins/job/Pixelmed-jar/ws/target/Pixelmed-jar-1.0.1-SNAPSHOT-script.zip)

- sudo mkdir /opt/pixelmed

- sudo chown gazelle:jboss-admin /opt/pixelmed/

- unzip it in /opt/pixelmed/

- edit /opt/pixelmed/bin/pixelmedValidator.sh, add JAVA_HOME={path to java 7 home}

[Deprecated] TM - Test Management

Warning: This documentation is outdated; the newest version is available at https://gazelle.ihe.net/gazelle-documentation/Test-Management/user.html

Gazelle Test Management Feature List

The following table summarizes the list of features in the Gazelle Test Management Tool.

| Feature | Bordeaux 2010 | Pisa 2011 | Chicago 2012 | Bern 2012 |
| --- | --- | --- | --- | --- |
| Registration Process Management | | | | |
| Users Management | x | x | x | x |
| Contacts Management | x | x | x | x |
| Companies Management | x | x | x | x |
| Contracts Management | x | x | x | x |
| Fees Management | x | x | disabled | x |
| Systems Management | x | x | x | x |
| Test Definition Management | | | | |
| Tests Management | x | | | |
| Binding to Gazelle Master Model | x | x | x | |
| Test Session Management | | | | |
| Configuration management | x | x | x | x |
| Proxy Management | x | disabled | x | |
| Order Manager | ? | x | | |
| Monitor Management | x | x | x | x |
| Test Update Notification | disabled | x | | |
| Goodies | | | | |
| Mobile Test Grading for Monitors | x | ? | x | |
| Single Sign On | x | disabled | x | |

Test Management Components

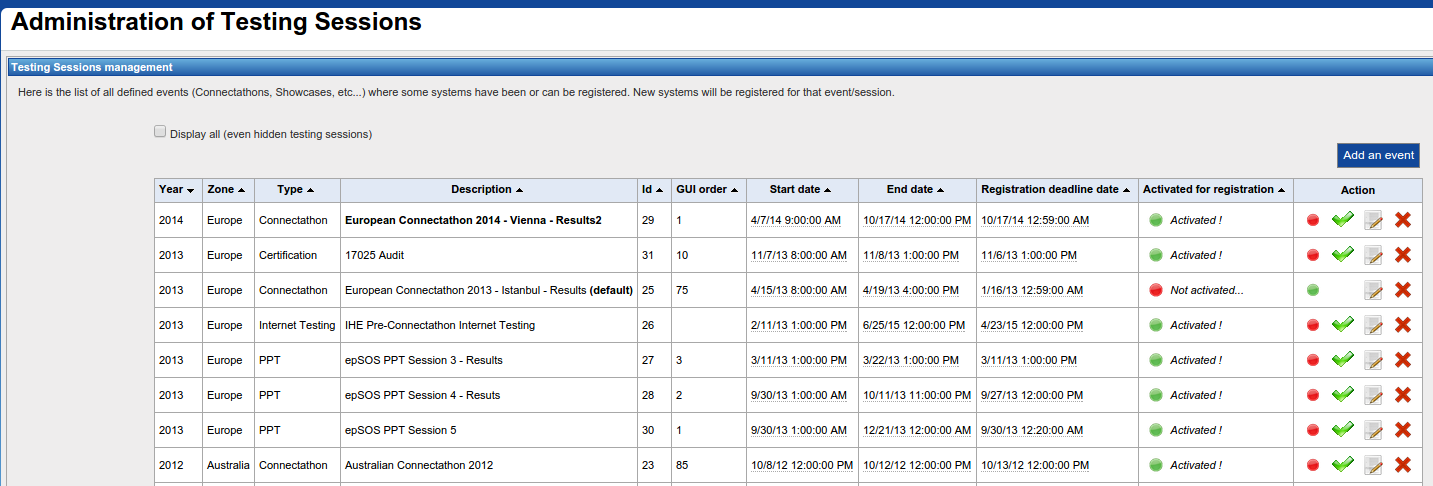

Testing Session Management

Gazelle Test Management can handle multiple testing sessions, which can be run in parallel. Monitors and systems register for a specific testing session. One can select the coverage of the testing session in terms of Domain and/or Profile. One can also specify the type of testing to be performed in a testing session: Pre-Connectathon, Connectathon, Certification.

Users Management

Module for the management of Gazelle users and their roles. Handles user account management: registration, lost password, user information update. Handles the management of the roles of the users.

Users are associated with an organization.

Contacts Management

Management of the contacts for a participating company. Allows the participating companies to enter contacts within Gazelle. Contacts are mainly used for administrative purposes. Used to identify the financial contact, the contact for marketing questions and the contact for technical questions.

Contacts are NOT required to have a login.

Organizations Management

Management of all organization-related information. This information is mainly for the purpose of connectathon management. Users, Contacts and Systems belong to an organization. The module allows the management of the information about the organization: address, contacts, VAT information, etc.

Contract Management

Component that manages the creation of the contract for testing session participants. It summarizes the registration of the participants and creates a PDF document based on a template that can be customized for each testing session. This makes use of the JasperReports library. The link to the JasperReports template for the contract definition is specified within the properties of the testing session. One has the choice to enable or disable contract generation for a given testing session.

Fees Management

Component for the management of invoices (generation of the invoice, based on a template) and for estimating the amount of the fees to be gathered for an event based on participation. Helpful for connectathon management. Invoice generation depends on the testing session and, as for the contract, is based on a template that can be specific to a session. It can be disabled for a given testing session.

Systems Management

Module for the management of Systems Under Test (SUT). Manages the registration of the SUT for a testing session and records the actors, integration profiles and options supported by each SUT. Allows participants to copy system characteristics from one testing session to another.

Contains a rules engine that checks if the system correctly implements the IHE requirements of dependencies among the actors and profiles.

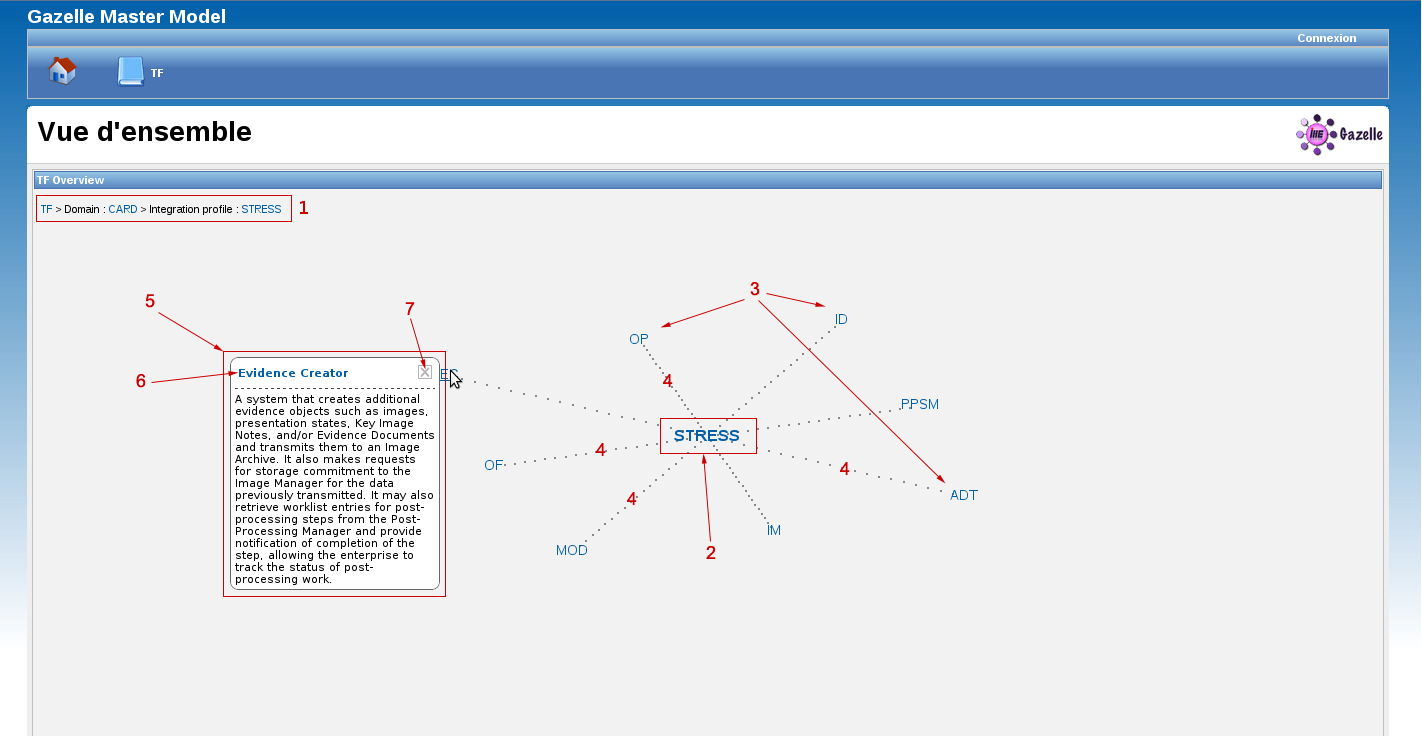

Tests Management

Modules for the definition of the tests. Test requirements per Actor/Profile/Options. Test script definition : Who are the participants ? What are the instructions for the participants ? What are the steps required to perform the test ?

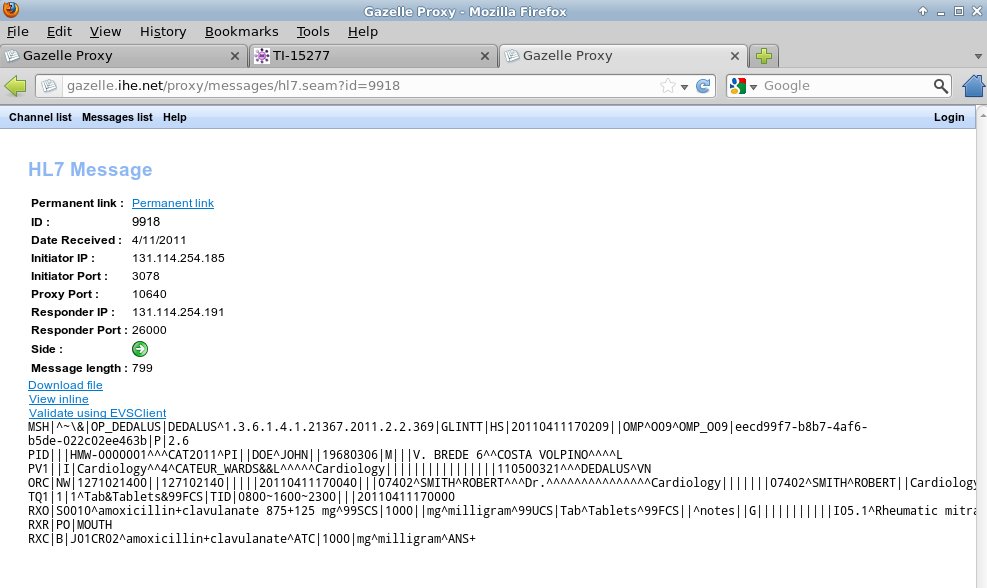

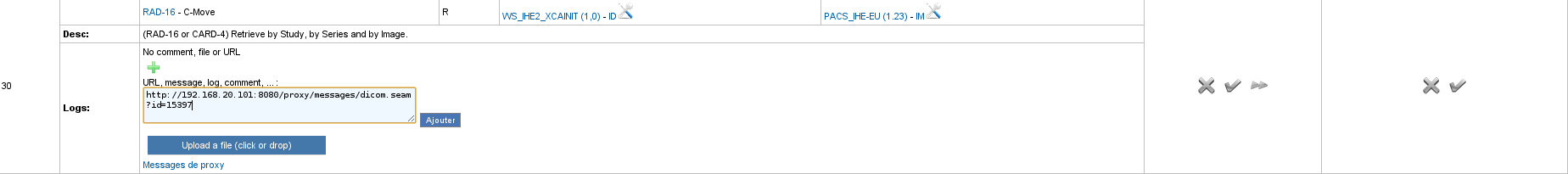

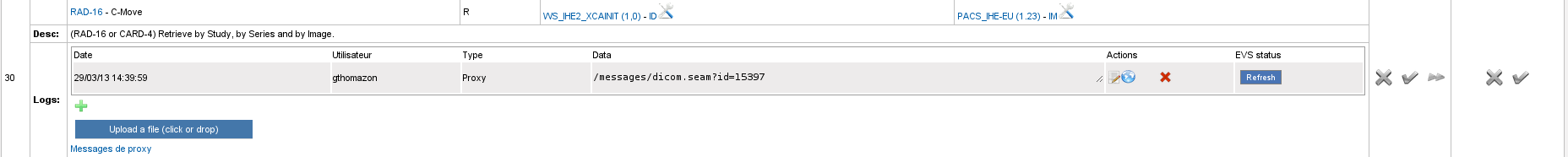

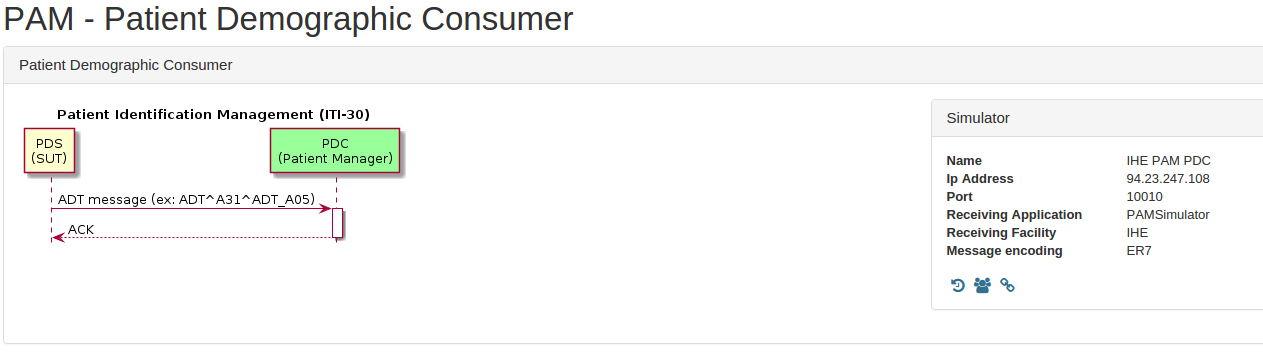

Proxy Management

Interaction with the Proxy component. Controls the proxy by instructing it which interfaces to open and close for testing purposes. Allows participants to link test instance steps to the corresponding messages captured by the proxy.

Simulator Management

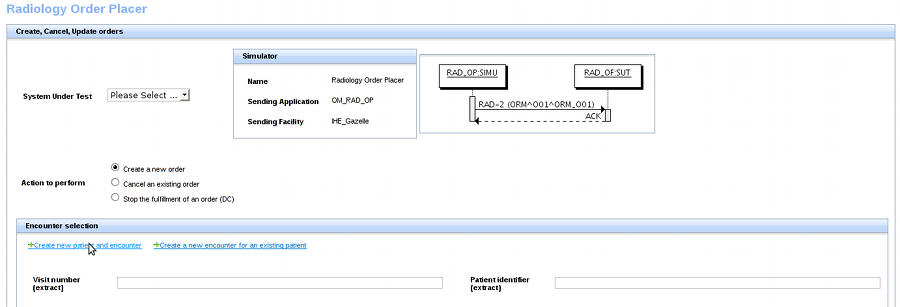

Component for the declaration and the management of the simulators that Gazelle uses to perform the tests.

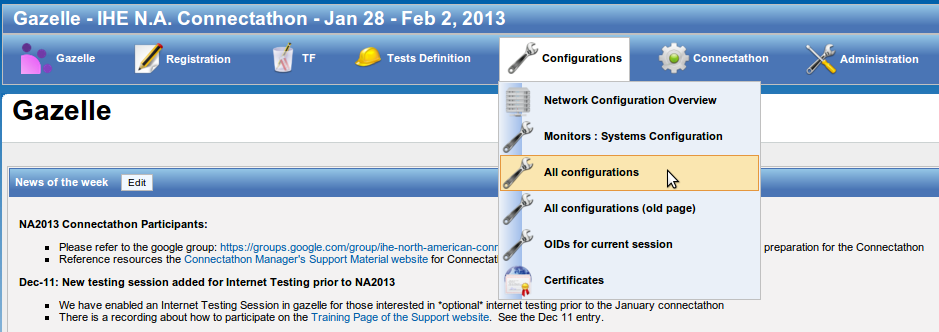

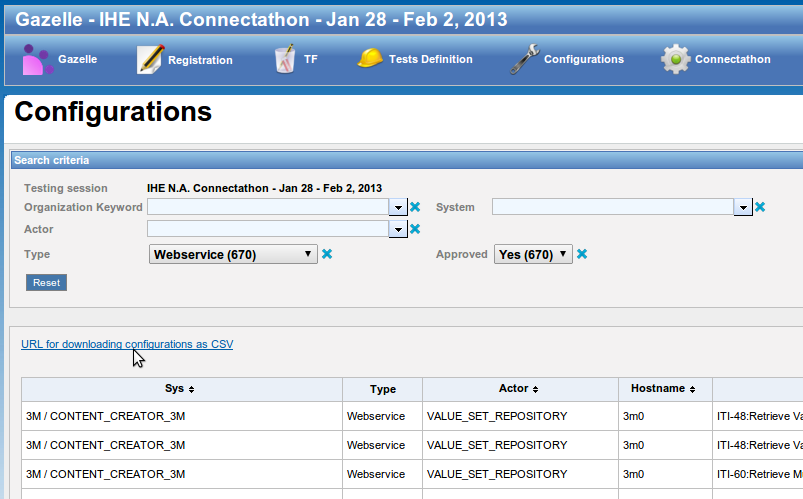

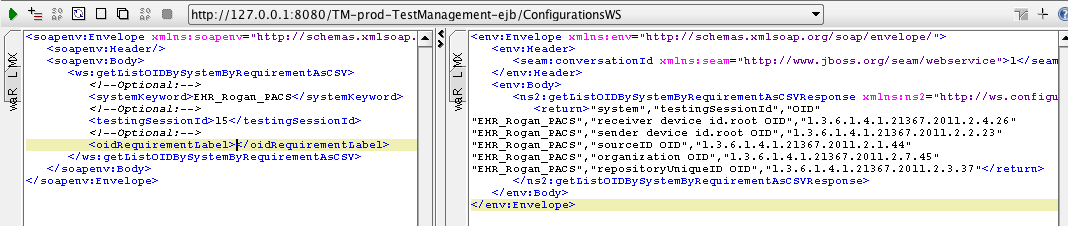

Configuration Management

Management of the configuration of the systems and simulators performing together in a testing session. Since much of the time spent during testing is lost exchanging configuration parameters, it is essential that Gazelle allows the test participants to share configuration information correctly and rapidly. This component allows users to provide the information relative to their own systems. It also allows the test participants to rapidly find the relevant information about their peers in a test.

Monitor Management

Management of the monitors. Monitors are the independent and neutral individuals who check and validate the tests performed during a testing session. The component allows the Technical Manager to assign tests to specific monitors and to split the load of test verification among the monitors. Tests can be assigned to monitors either test by test or monitor by monitor.

Samples Sharing Management

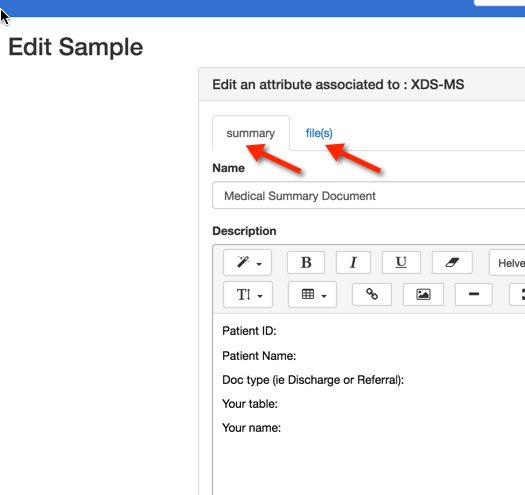

Module for sharing samples. This component allows registered systems to share and find samples. Some systems need to provide samples, some need to render them. The component allows the participants to easily find the samples relevant for their systems. The sample sharing module is linked to External Validation Services for checking the conformity of the shared samples. The component also makes use of internal validation services.

When relevant, the module allows sample creators to provide a screen capture of the rendering of their samples. It also allows sample readers to provide a screen capture of the rendering of the sample on their system.

A sample and a test can be linked together. This is useful in the case of scrutiny tests.

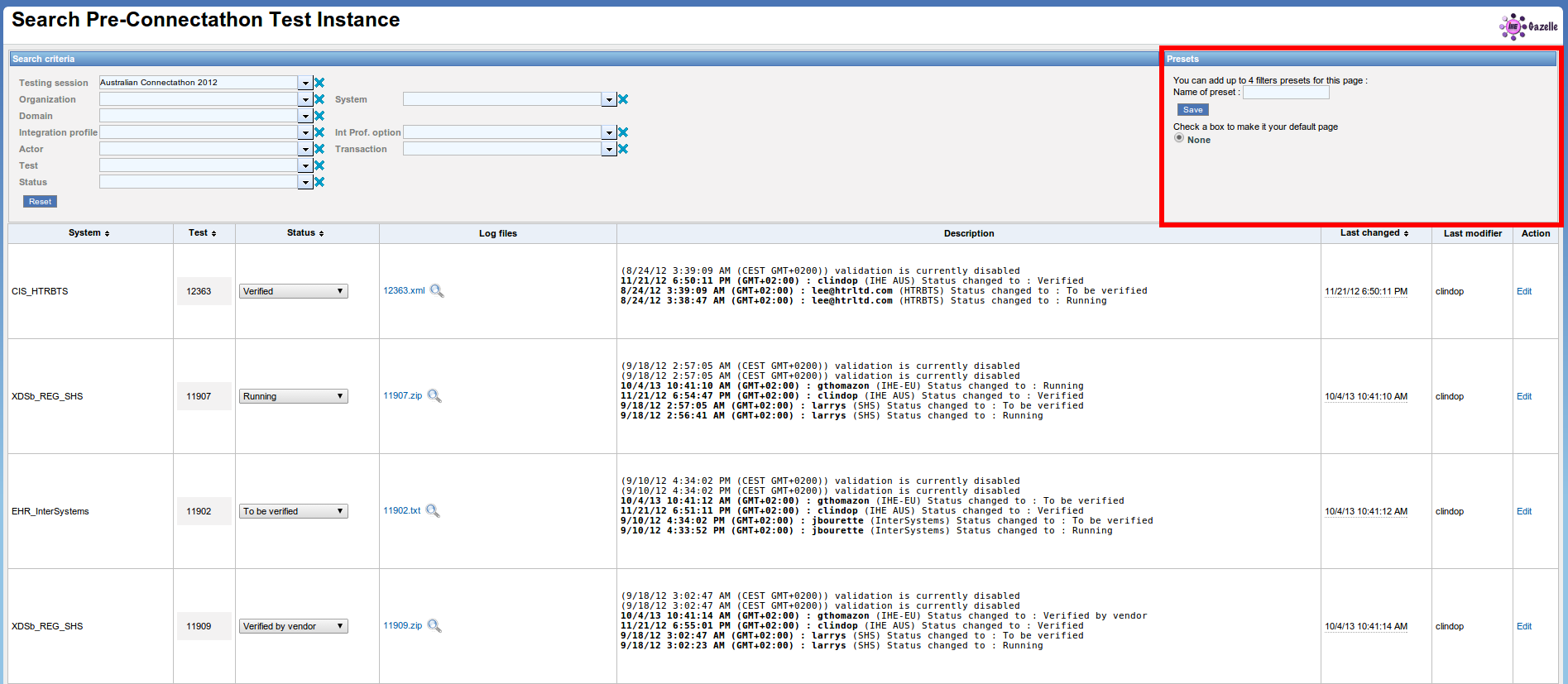

Pre-connectathon Test Management

Allows managers to define the pre-connectathon tests: where to find the tool, where to find the documentation, and which actors/profiles/options are concerned by the test.

Allows participants to find out which pre-connectathon tests they have to perform, to return the corresponding logs and to view the result of the log grading.

Connectathon Test Management

Allows the managers to define the tests.

- What is the purpose of the test? (assertion tested)

- What actors/profiles/options are concerned by the test? (test participants, including the cardinality for each participant)

- The scenario of the test (sequence diagram of the interactions between the participants in the test), including the definition of what needs to be checked at each step.

- How many instances of the test are required for a SUT to be declared successful for the test.

Allows engineers to find out which tests need to be performed by their systems.

Allows engineers to start a test and select its participants (peer systems under test or simulators).

Connectathon Test Grading

Module to allow the grading of systems' participation during the connectathon. Allows the project managers to review the tests performed and determine whether or not a system succeeded for a specific actor / integration profile / option combination.

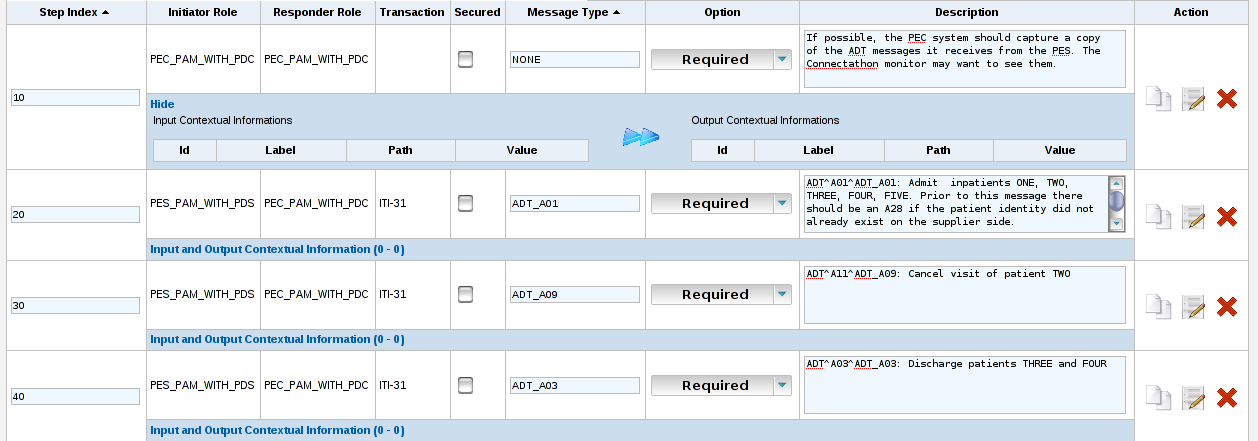

Patient Demographics for Testing Purposes

Module for sharing patients among the participants in a test instance. The module allows the generation of simulated patient data using the Demographic Data Server. Generated patient data can be sent to a selection of test participants using HL7 V2 or HL7 V3 messages.

Selection of the targets is done based on the systems' configuration as declared in Gazelle. Targets can also be configured manually. The component allows the management of multiple patient identification domains. The generated patients are assigned a unique id in the various patient identification domains of the target systems.

Stored data can be used for validation of the messages exchanged by test instance participants.

Single Sign On

When enabled, this component of Gazelle Test Management allows users to use a CAS server for their identification. It harmonizes the logins among the different applications. All Gazelle simulators also use the CAS login component, as does the JIRA bug tracking system.

Test Update Notification

The purpose of the Test Update Notification is to reduce the load on the server that is hosting the Gazelle application. It allows connectathon participants to be informed of updates on the test instances that concern them. Instead of refreshing the Gazelle Connectathon Dashboard all the time, participants are informed of new test instances, updates, comments and grading.

Order Manager Binding

This feature is an extension of the Patient Demographics for Testing Purposes. In addition to the creation of dummy patients, it allows creating encounters and orders for them and sending them to test participants. This is useful for preparing the right conditions for a test: all SUTs participating in a test can be aware of the same patient, encounter and order.

Mobile Test Grading for Monitors

This feature adds a web service front end to Gazelle Test Management in order to allow the grading of tests using a mobile device such as a Wi-Fi enabled tablet (iPad) or a smartphone (iPhone, Android...). Monitors can use their mobile device to scan a QR code on the page of the test instance to grade and then directly access the page on their device.

[Deprecated] Gazelle Test Management User Manual

Warning: This documentation is outdated; the newest version is available at https://gazelle.ihe.net/gazelle-documentation/Test-Management/user.html

This page indexes the different links to the user manual for the Gazelle Test Management application.

Basic Terminology

Test Types

No Peer

- Test run by yourself. No test partner is needed to run this type of test.

- Used for conformance testing of artefacts (documents, messages) that the SUT can produce.

- No Peer tests are usually prerequisites to Peer to Peer tests.

Peer to Peer

- Test run with one or two partner SUTs.

- Test scheduled by SUT manager.

Group Test

- Test run with a larger group of SUTs

- Test scheduled by management

Thorough / Supportive

See more information there. The Thorough / Supportive testing mode is defined at registration time.

Thorough Testing

- For those who do not qualify for Supportive Testing. The default testing mode.

Supportive Testing

- For profiles in Final Text

- For Actor / Integration Profile / Option that have been tested by the vendor in a past connectathon

- There must be a published integration statement

- Used for participants who would like to help partners

Test / Test Instance

Test

A definition of a test involving one or more IHE actors in one or more profiles.

Test Instance

One instance of a test with specific test partners

Registration Process

During the Registration process, the Gazelle Test Management Application gathers information needed in order for an organization to participate in an upcoming testing session.

To complete registration for a testing session, an organization uses Gazelle to enter:

- User account(s) in Gazelle

- Organization details

- Contact persons in your organization

- System information - the profiles/actors/options that you will test

- Participant information - people who will attend the testing session

When this information is complete, you may be asked to generate a contract in Gazelle for the testing session.

"How to" details for these tasks follow in the pages below.

Registration concepts

Organization

In Gazelle, an organization is the entity that is presenting test systems at the Connectathon.

IHE publishes Connectathon results per organization; thus, the organization name, address, and financial contact you enter in Gazelle are important.

Users and Contacts

A “User” has a login and password to access the gazelle webtool

A “Contact” is a person in your organization who will interact with us in preparation for the connectathon:

- Financial Contact (only 1)

- Marketing Contact (1 or more)

- Technical Contact (1 or more)

A user may, or may not, be a contact

Users and Contacts belong to an Organization

Users Roles

Two levels of users:

- Vendor admin role

  - Approves new user accounts for your organization

  - Can edit user and contact information

  - Can edit organization info (eg address)

  - Can edit all test system & testing details

- Vendor role

  - Can edit all test system & testing details

System

In Gazelle, a ‘system’:

- represents a set of IHE profiles, actors and options an organization wants to test

- participates in the testing session as the SUT

Testing Session

Gazelle Test Management can manage multiple testing sessions. A testing session may represent:

- Connectathons

- Internet Testing

- Projectathons

When a user logs in to Gazelle, the user is viewing information for a single testing session. Gazelle always "remembers" the last testing session a user was working in. A user may switch between testing sessions. How-to instructions are here.

Creating and managing user accounts for an organization

A "user" is a person with a login and password to access the Gazelle Test Management tool. A user in Gazelle is always linked to an organization. A user can only view/modify information for that organization.

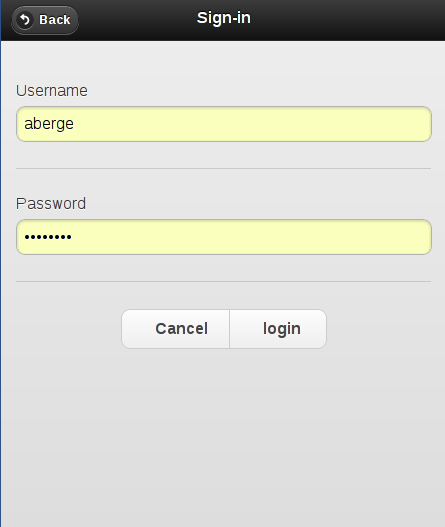

Creating a new user account

To create a new user, go to the home page of Gazelle Test Management and click on the link "Create an account"

Then you need to fill the form with valid information.

If your organization is already entered in Gazelle, select it from the "Organization Name" dropdown list; otherwise select "New company" as the "Organization Name" to create a new organization in Gazelle.

When you complete the user information, an email is sent to you to confirm your registration.

The user account is not activated until a user in your organization with admin privileges in Gazelle activates your account.

User privileges in Gazelle

If you are registered as a user with 'vendor' privileges in Gazelle (the default), you can manage the tests and configurations for your organization's test systems.

If you are registered as user with 'admin' privileges in Gazelle, you are able to :

- manage users related to your organization (activate/de-activate accounts)

- manage contacts to your organization

- manage the testing session participants

- manage contracts and invoices

Managing your organization's user account as a "vendor_admin" user

As an admin user you can manage the users in your organization from Gazelle menu Registration -> Manage users

You can use this form to activate or de-activate user accounts, or update email or password for a user.

Registering an organization

Gazelle gathers information about organizations participating in a testing session. This enables managers of the testing session to contact you about invoicing and other testing session logistics. The organization information is also used when publishing the successful results of a testing session.

Organization information is entered in two scenarios

- If an organization is registering in Gazelle for the first time, a user creating an account in Gazelle is asked to link the account to an organization and enter organization details.

- You can edit the information for your organization through the Gazelle menu Registration --> Manage organization information. The organization information is as follows:

Contacts Management

A "Contact" is a person in your organization who will interact with managers helping you to prepare for the testing session.

An organization must identify:

- Financial Contact (only 1)

- Marketing Contact (1 or more)

- Technical Contact (1 or more)

Note: a "contact" may, or may not, be a person with a "user" account in gazelle

A user with vendor_admin privileges can enter contact information in Gazelle with menu Registration --> Manage Contacts

The page looks like this.

Select the "Add a contact" button to enter name, email and phone information for the contacts in your organization.

Entering system information -- profile/actor/options to be tested

In Gazelle Test Management, the term "system" refers to a set of application functionality expressed in terms of IHE profiles, actors, and options. An organization may register one or more systems in a testing session.

The "system" participates in the testing session with peer "systems" from other organizations.

"System registration" is an important step because the profiles/actors/options you select drive much of what happens during the test session. It determines:

- what test tools you will use

- what tests you will be required to perform

- who your test partners will be

Starting system registration

First, ensure that you are logged into the Testing Session that you want to register for. The name of the testing session is displayed at the top of the page whenever you are logged into gazelle. You can learn more about testing sessions here.

Next, the system registration page is accessed through Gazelle menu : Registration -> Manage Systems.

On that page you can :

- Add a system : This will create a new system in the Gazelle tool.

- Import systems from another testing session : A click on that button enables you to copy a system from a previous testing session into this new testing session. The new system contains all of the profiles/actors/options that you registered in the previous session. You will be able to modify them if you wish. The name and the keyword of the system are appended with the string "_COPY" in order to distinguish the new system from the old one.

The instructions below assume you select Add a system.

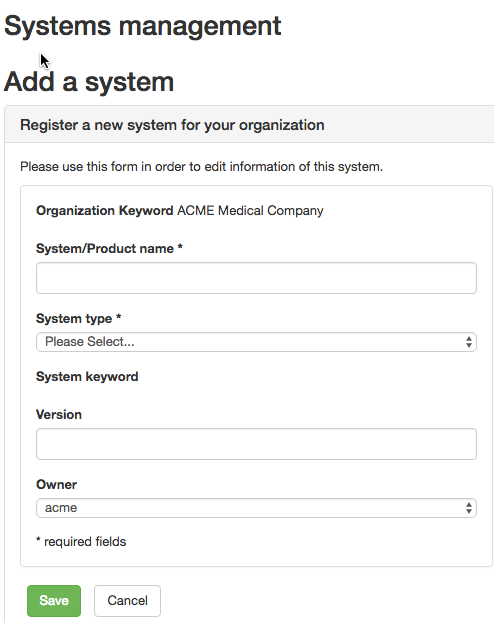

Completing the System Summary

You will be prompted for general information to identify your system:

- System/Product Name - this is how you refer to your system within your organization

- System type - you will select from a drop-down list of labels that are general categorizations of systems under test (eg "PACS", "EHR", "GATEWAY"). There is no right or wrong selection here.

- System keyword - this is how we will refer to your test system during the testing session. This field is auto-filled by Gazelle. It is a combination of the system type you selected and your organization's acronym (eg "EHR_ACME"). You can append a suffix to the gazelle-generated value.

- Version - an optional field that enables you to identify the version of your product

- Owner - this is a user within your organization who will have primary responsibility for managing this system during the test session
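To illustrate how a system keyword is composed, here is a minimal sketch based on the "EHR_ACME" example above. The function name `system_keyword` is hypothetical, and joining the optional suffix with an underscore is an assumption; Gazelle itself generates the actual value.

```shell
#!/bin/sh
# Build a system keyword from a system type and an organization acronym,
# following the "EHR_ACME" example. The optional suffix and the underscore
# that joins it are assumptions made for this illustration.
system_keyword() {
    sys_type="$1"
    acronym="$2"
    suffix="$3"
    keyword="${sys_type}_${acronym}"
    if [ -n "$suffix" ]; then
        keyword="${keyword}_${suffix}"
    fi
    printf '%s\n' "$keyword"
}

system_keyword EHR ACME        # prints EHR_ACME
system_keyword PACS ACME 2     # prints PACS_ACME_2
```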

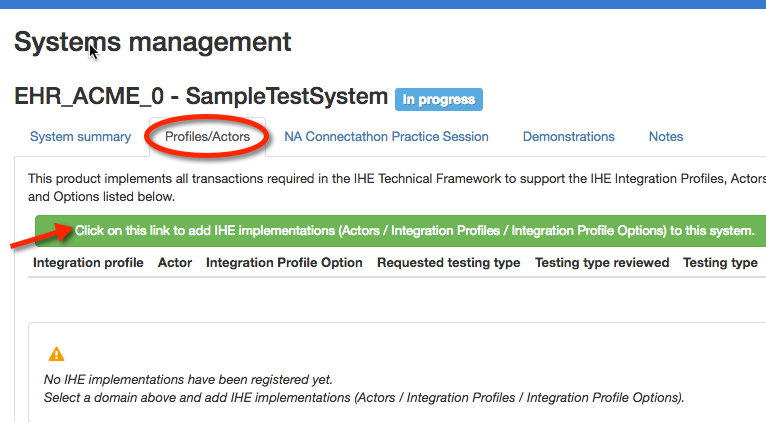

Completing the Profiles/Actors tab

After saving the system summary information, Gazelle creates a System Management page to collect information about your test system.

The next step is to go to the Profiles/Actors tab and select Edit.

Select "Click on this link to add IHE Implementations..."

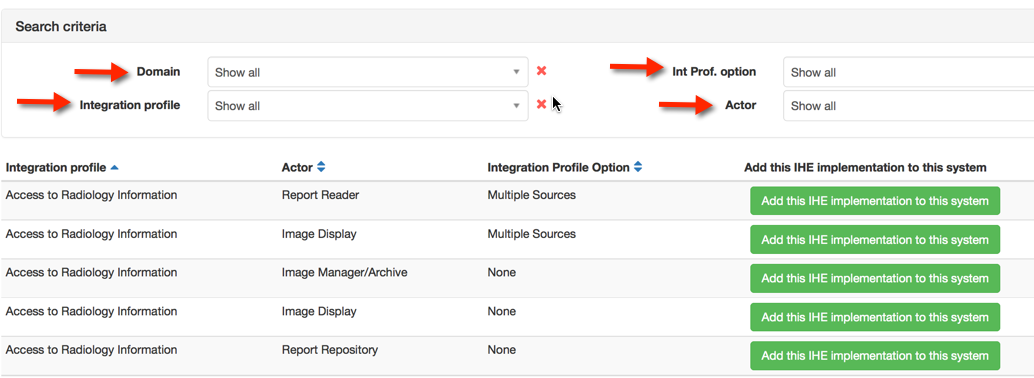

Next, you will use the dropdown lists to select the Domain, Profile, Actor, Option supported by your system. After making your selection, click on "Add this IHE Implementation to this system." You will repeat this procedure for each profile, actor, and option your system supports. Note that the "None" option represents the baseline requirements for that profile/actor without any option.

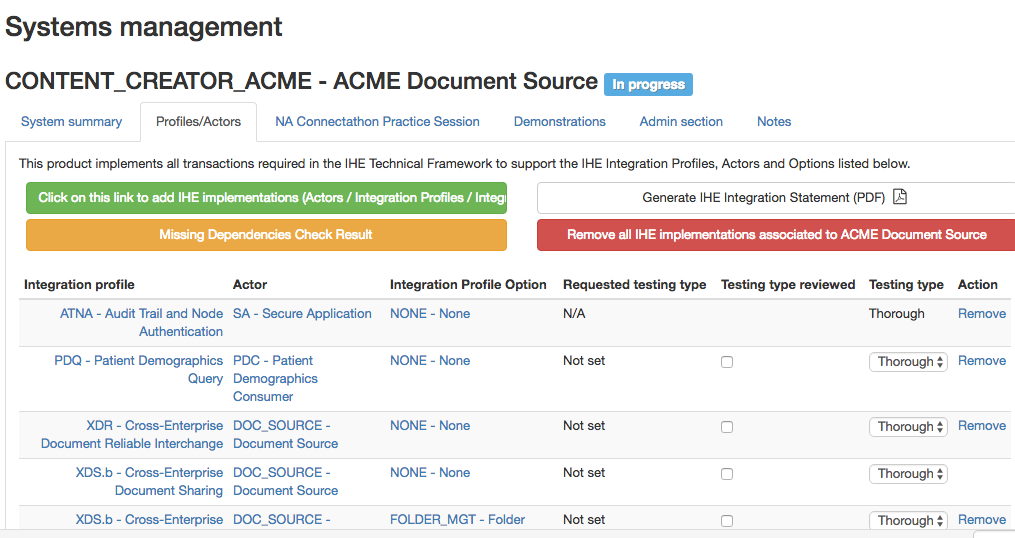

When you have selected the profile, actor, and options for your test system, they will appear on the Profile/Actors tab of your system's registration as follows:

Finishing System Registration

To finish your system registration:

- On the "Profile/Actors" tab, look for the yellow "Missing Dependencies" button. If it appears, it means that you have registered for a profile/actor that has a dependency on another profile. Select the button to complete registration for those dependencies.

- On the "Profile/Actors" tab, some of the profile/actor pairs you selected may be eligible for "Supportive" testing. "Thorough vs Supportive testing" is explained here.

- On the "System Summary" tab, change the Registration Status from "In progress" to "Completed". This is a signal to the technical manager of your testing session that you have completed your registration for this system

Generating a contract in Gazelle

Introduction to contract generation

The Gazelle Test Management tool is used to generate a contract for some testing sessions.

The contract includes the information you have entered into Gazelle during the registration process:

- Organization details

- Contacts in your organization - financial, marketing, and technical

- One or more systems to be tested and several contacts.

If any of these is missing, Gazelle will not let you generate a contract.
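The completeness check described above can be sketched as follows. This is a hedged illustration only: the `Registration` class, its field names, and the message strings are hypothetical and do not reflect Gazelle's internal data model.

```python
from dataclasses import dataclass, field

# Contact roles listed in the contract prerequisites above.
REQUIRED_ROLES = ("financial", "marketing", "technical")


@dataclass
class Registration:
    """Hypothetical summary of what an organization has entered so far."""
    has_organization_details: bool = False
    contact_roles: set = field(default_factory=set)
    system_count: int = 0


def missing_for_contract(reg: Registration) -> list:
    """Return the items that still block contract generation (empty list means ready)."""
    missing = []
    if not reg.has_organization_details:
        missing.append("organization details")
    for role in REQUIRED_ROLES:
        if role not in reg.contact_roles:
            missing.append(f"{role} contact")
    if reg.system_count < 1:
        missing.append("at least one system")
    return missing


reg = Registration(has_organization_details=True,
                   contact_roles={"financial", "technical"},
                   system_count=1)
print(missing_for_contract(reg))
```

In this example the only blocking item is the marketing contact, which corresponds to the "Missing Marketing Contacts" error.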

To generate your contract

A Gazelle user with "vendor_admin" privileges is able to generate a contract. Select Gazelle menu Registration -> Financial Summary.

First Level Registration Errors

This section contains common error messages that occur when generating a contract. The error messages in red are generated by Gazelle. The numbers in ( ) are pasted onto the screen capture and refer to notes below the figure.

- (1) The mailing address is missing for the company

- This is for general correspondence with your company.

- Select Registration -> Manage company information

- Under Mailing address, add an address for your company. This is on the right half of the form. Select Click here to add a new address. If you already have entered an address but you were presented with the error message, you need the next step that associates the address as your company mailing address. This first step adds an address to our database, but does not make it your mailing address.

- The address that you entered is presented in a table with the rightmost column an action column. If you have added a second address, you will see a table with two lines. To associate an address as your mailing address, click on the Select link in the appropriate row.

- You will now see the address copied from the right part of the form to the left side of the form, including the country flag. Scroll down and select Save. That should get rid of error (1).

- (2) Missing address for Financial Contact

- This address is for the person in your organization responsible for processing our requests for payment.

- Select Registration -> Manage company information

- Scroll down to the Billing address section. Add an address on the right side of the form; Select Click here to add a new address.

- i) You might already have an address for your company that is the same for this person. Great; skip down to iii.

- ii) If you don’t have an address registered for this financial contact or the address of the financial contact differs from the main address, add it now.

- iii) In the table of addresses on the right side of the form, under the Billing address section, click on the Select link in the appropriate row.

- iv) You will now see the address copied from the right part of the form to the left side of the form.

- Scroll down and select the Save button.

- (3) Missing Technical Contacts

- We need to verify that there are one or more staff members in your organization we can contact for testing and other technical details.

- Select Registration -> Manage contacts

- You will see a table of contact points in your organization. That table might be empty or already have members. You can add a contact person with the Add a contact button in the upper right corner. For this error (Missing Technical Contacts), make sure you select the checkbox for Technical.

- If the Technical Contact was already listed in the table but is not indicated as a Technical Contact in the Professional functions column, edit the row for that person (Edit button in the rightmost column of the table). Select the checkbox for Technical and do not forget to hit Save.

- We require at least one contact entered in the system for the generation of the contract. You are welcome to enter more technical contacts. This will be useful for organizations that have multiple systems, each with a separate technical contact.

- If you are bringing one system and three engineers, please enter one candidate as your technical contact. That person would be able to answer most technical questions or delegate to someone else. Please do not enter a person who is merely going to contact your engineers and pass along the questions.

- (4) Missing Marketing Contacts

- We need to verify that there are one or more staff members we can contact if we have marketing questions (is there a logo we can use in documentation? What is the exact name to use for your company?).

- Refer to (3) above. Rather than selecting the checkbox for Technical, select the checkbox for Marketing/Commercial.

- The same person can fill the roles for Billing, Technical and Marketing/Commercial. We split them out for those organizations that have separate duties.

- (5) Missing System Information

- If there is missing system information, you have registered zero systems for testing. The purpose of the contract is to explicitly list what you will test and include a computation for fees. You will need to register the system or systems you wish to test and all profile/actor pairs to be tested.

- Select Registration -> Manage systems

- Enter one or more systems.

- Make sure you save the systems.

- Make sure you select the Profile/Actors tab and enter what you want to test. Check for missing dependencies (ATNA requires CT).

- Fill in information if you plan to participate in a Showcase/Demonstration

- (6) Missing Domain Information

- This means you have either entered zero systems, or you have entered systems but included no profile/actor pairs.

- Refer back to (5) for details.

Second Level Errors: Dependencies

Many IHE Integration Profiles have dependencies defined in the documentation. One example is that an ATNA Secure Node is always required to implement the Time Client actor in the Consistent Time profile. When you enter registration information, there is a button available to you labeled Check for Missing Dependencies. Rather than automatically registering you for the dependencies, the system gives you this button to let you check when you feel it is appropriate. A box will pop up, list the missing dependencies, and give you the option to click a link to add them on the spot.

When you return to the financial page, one section will list all of your registered systems. The grid will give the system name, domains tested and fee information. One column will also indicate if all dependencies have been resolved. A green icon indicates sufficient registration; a red icon means that a dependency needs to be satisfied.

You cannot generate a contract if there are dependencies to be resolved, so you need to return to the page for managing systems.

- The rules defining dependencies are written in the Technical Frameworks. We transcribe those rules into Gazelle.

- The rules defining dependencies do not identify all combinations that you will find important. For example, the XDS.b profile requires the Document Source and Document Consumer to obtain a consistent patient identifier for an Affinity Domain but does not specify the mechanism. We support that with the PIX and PDQ profiles for Connectathon testing, but do not require that. Participants fail to register for PIX or PDQ and are then surprised to realize they do not have a mechanism to obtain the patient identifier in the Affinity Domain.
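The dependency check can be pictured with the short sketch below. It is only an illustration under stated assumptions: the `DEPENDENCIES` table is a hypothetical stand-in for the rules transcribed from the Technical Frameworks (it holds just the ATNA/Consistent Time example from this section), and the function name is invented for this example.

```python
# Hypothetical rule table: (profile, actor) -> list of required (profile, actor) pairs.
# Only the ATNA Secure Node -> CT Time Client rule from the text is included.
DEPENDENCIES = {
    ("ATNA", "Secure Node"): [("CT", "Time Client")],
}


def missing_dependencies(registered_pairs):
    """Return required profile/actor pairs that are not yet registered for a system.

    Only direct dependencies are checked; chains of dependencies are not followed.
    """
    registered = set(registered_pairs)
    missing = []
    for pair in sorted(registered):
        for required in DEPENDENCIES.get(pair, []):
            if required not in registered and required not in missing:
                missing.append(required)
    return missing


system = [("ATNA", "Secure Node"), ("XDS.b", "Document Source")]
print(missing_dependencies(system))
```

An empty result corresponds to the green icon on the financial page; a non-empty result corresponds to the red icon. Note that, as explained above, nothing is reported for XDS.b: PIX and PDQ are not formal dependencies, so a check of this kind cannot warn about them.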

Register Participants to Testing Session

This page describes how to register the participants for a Testing Session. Participants in the testing session are the people who will get a badge and will be able to enter the floor where the testing is taking place.

Registration of the participants to the Testing Session can only take place when the registration of the systems is over.

Only the users with the role "vendor_admin" can register participants to the testing session.

The participant registration page is accessed through the menu Registration -> Testing Session Participants.

See the illustration below:

There are three ways to register a participant for a testing session:

- import from users

- import from contacts

- add participant

Import from Users

By selecting the button import from users, one can select the participants to add from the list of registered users for the organization.

Import from Contacts

By selecting the button import from contacts, one can select the participants to add from the list of contacts already declared in Gazelle Test Management tool. Contacts do not need to have a login.

Add Participants

By selecting the button add participants, one can manually enter all the information about the participant to register.

User Preferences

User preferences are mainly used by the Gazelle Test Management application to customize some views according to the user's wishes. The main preferences you may want to update are:

- The number of results (rows) in table

- Whether or not you want the sequence diagram of a test to be displayed on the Test Instance page. Hiding it saves some space on the page and makes the page lighter to load.

User preferences can also be used to communicate some useful pieces of information to monitors and other connectathon participants, such as your photo, the languages you speak...

To configure your own user preferences, you must first log in to Gazelle. Then, on the top right corner of Gazelle, click your username and then select User Preferences (shown below).

This link leads you to your preferences management page. If you have never changed anything, the page may look something like this.

Preferences on this page:

Change your password

Skype account: During the connectathon week, it can be useful to communicate using Skype. Such a field already exists for the system, but a monitor, for example, who does not own any system may want to "publish" his/her Skype name to speak with other monitors or participants.

Table Label: During the connectathon week, you will sit at a table that everybody can locate thanks to a table label, typically A1, J10... When you sit down on the first day of the connectathon, fill in this value so that other attendees can find you more easily.

Spoken languages: The languages you are able to speak.

Hit the Edit button to update those fields. The following panel will be displayed.

When you hit the "plus" icon, a menu is displayed and you can pick your language(s). If needed, hit the "red cross" button in front of a language to remove it from the list. When finished, hit the "finish" button.

When you hit the "add/change photo" button, a panel like the one below is displayed. Clicking on "add" will open your file browser. Select your photo; only PNG and JPG files are allowed. Be careful not to choose an image that is too large: all images with a height greater than 150px will be proportionally resized down to a height of 150px.
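To make the proportional resizing concrete, here is a small sketch of the arithmetic. The function name and the rounding of the width are assumptions for illustration; the only rule taken from the text is that images taller than 150px are scaled so that their height becomes 150px.

```python
MAX_HEIGHT = 150  # pixels, as stated in the text above


def resized_dimensions(width: int, height: int, max_height: int = MAX_HEIGHT):
    """Return (width, height) after proportional resizing to at most max_height.

    Images that already fit are returned unchanged. Rounding the scaled width
    to the nearest pixel is an assumption of this sketch.
    """
    if height <= max_height:
        return width, height
    scale = max_height / height
    return max(1, round(width * scale)), max_height


print(resized_dimensions(600, 300))  # a 600x300 photo is halved
print(resized_dimensions(100, 100))  # already small enough, left unchanged
```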

Do not forget to save your changes before leaving this page.

Testing Session

Concept of the Testing Session

Gazelle Test Management can manage multiple testing sessions. A testing session may represent:

- Connectathons

- Internet Testing

- Projectathons

Testing sessions are created by Gazelle Test Management administrators.

When a user logs in to Gazelle, the user is viewing information for a single testing session. Gazelle always "remembers" the last testing session a user was working in. A user may switch between testing sessions.

Selecting a Testing Session

In order to change testing session, log in to Gazelle. The name of your current testing session is displayed at the top of the screen.

To change testing sessions, select the "Switch" button.

Then select the session of your choice by clicking on the check-mark in the right column and press the "Continue" button at the bottom right of the page.

The top of the screen displays the name of the testing session that you have selected.

Pre-Connectathon Testing

Testing before IHE Connectathons is a requirement and is achieved using software written by several different organizations.

An index to all available tools for testing IHE profiles is provided at the following URL: http://wiki.ihe.net/index.php?title=IHE_Test_Tool_Information

Gazelle helps Connectathon participants manage the pre-connectathon tests.

- Based on the list of actors and profiles declared for the system, Gazelle will identify the list of tests that need to be executed.